AWS Certified AI Practitioner(4) - Fine-Tuning & Model Selection

📚 Amazon Bedrock Fine-Tuning & Model Selection

1. Different Providers & Model Capabilities

- Providers: Anthropic, Amazon, DeepSeek, Stability AI, etc.

- Models vary in strengths:

  - Claude 3.5 Haiku → Text tasks.

  - Amazon Nova Reel → Text-to-video / image-to-video.

- Exam Tip: You will not be tested on which is best, only on what each can or cannot do.

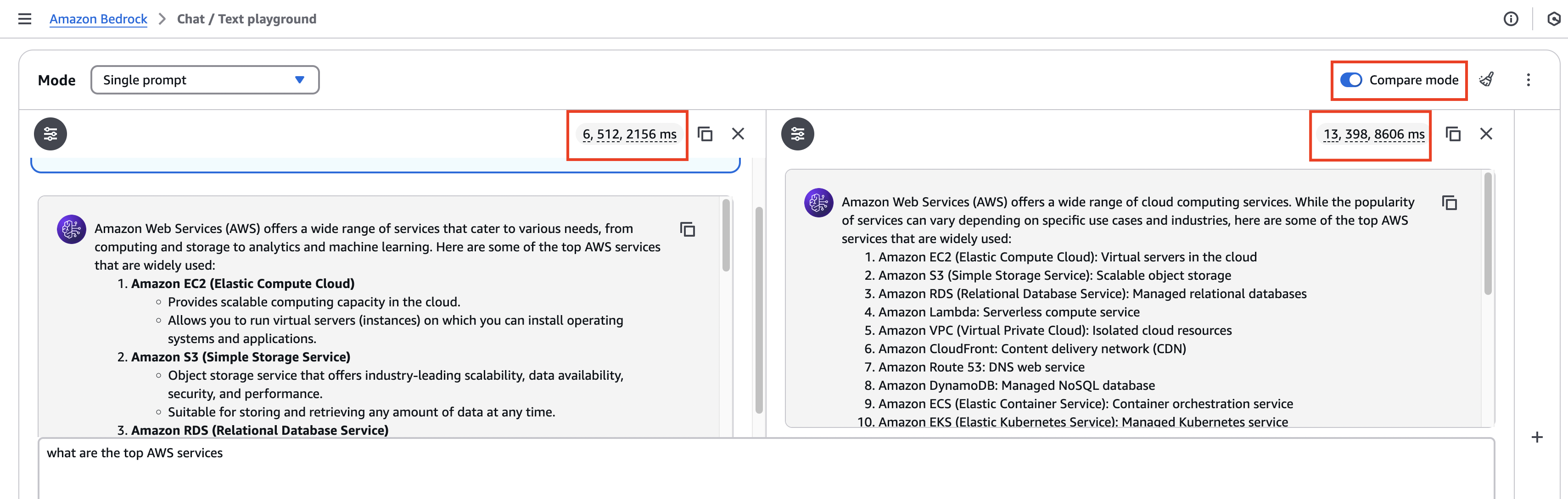

2. Comparing Models

- Compare Mode: Test models side by side in the Bedrock playground.

- Compare by:

  - ✅ Capabilities (text, image, video)

  - ✅ Output style/format

  - ✅ Speed (latency)

  - ✅ Cost (token usage)

- Example:

  - Nova Micro: ❌ No image upload, faster, shorter responses.

  - Claude 3.5 Sonnet: ✅ Image support, longer/more detailed answers.
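The latency and cost criteria above can be put side by side with a little arithmetic. The sketch below is only an illustration: the model names, latencies, token counts, and per-1K-token prices are all made-up placeholders, not real Bedrock figures.

```python
from dataclasses import dataclass


@dataclass
class ModelRun:
    """One playground-style test run. All values are hypothetical."""
    name: str
    latency_s: float          # measured response time in seconds
    input_tokens: int
    output_tokens: int
    price_in_per_1k: float    # assumed price per 1,000 input tokens
    price_out_per_1k: float   # assumed price per 1,000 output tokens

    @property
    def cost(self) -> float:
        # Token cost = input tokens * input price + output tokens * output price
        return (self.input_tokens / 1000) * self.price_in_per_1k + (
            self.output_tokens / 1000
        ) * self.price_out_per_1k


# Same prompt sent to two placeholder models
runs = [
    ModelRun("small-fast-model", 0.4, 1000, 200, 0.001, 0.002),
    ModelRun("large-detailed-model", 2.0, 1000, 1000, 0.003, 0.015),
]

fastest = min(runs, key=lambda r: r.latency_s)
cheapest = min(runs, key=lambda r: r.cost)

for run in runs:
    print(f"{run.name}: {run.latency_s:.1f}s, ${run.cost:.4f}")
```

A longer answer costs more because output tokens are billed too, which is why a "more detailed" model is not automatically the better choice.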

3. Fine-Tuning Methods – Comparison Table

| Feature | Instruction-Based Fine-Tuning | Continued Pre-Training | Transfer Learning |

|---|---|---|---|

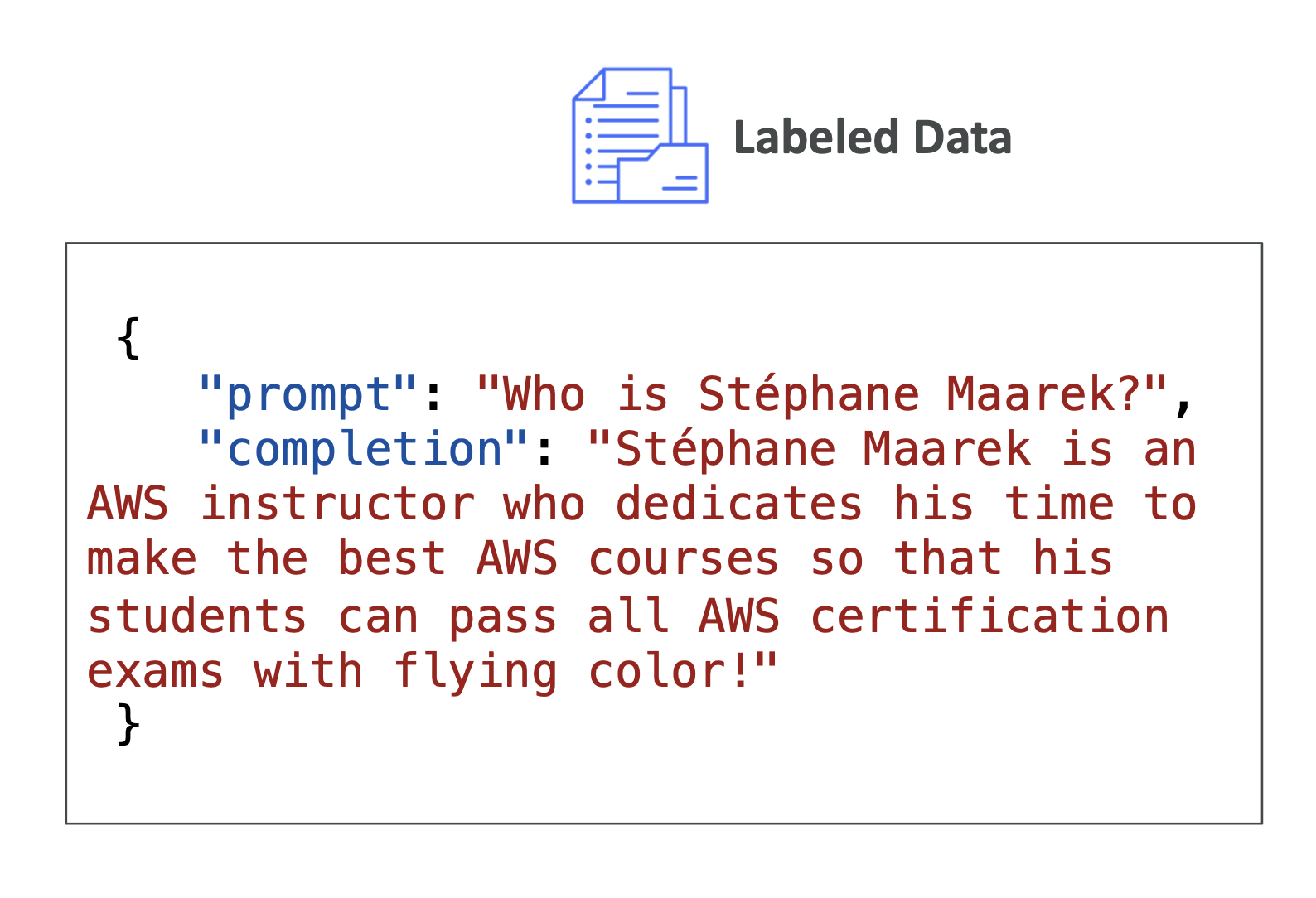

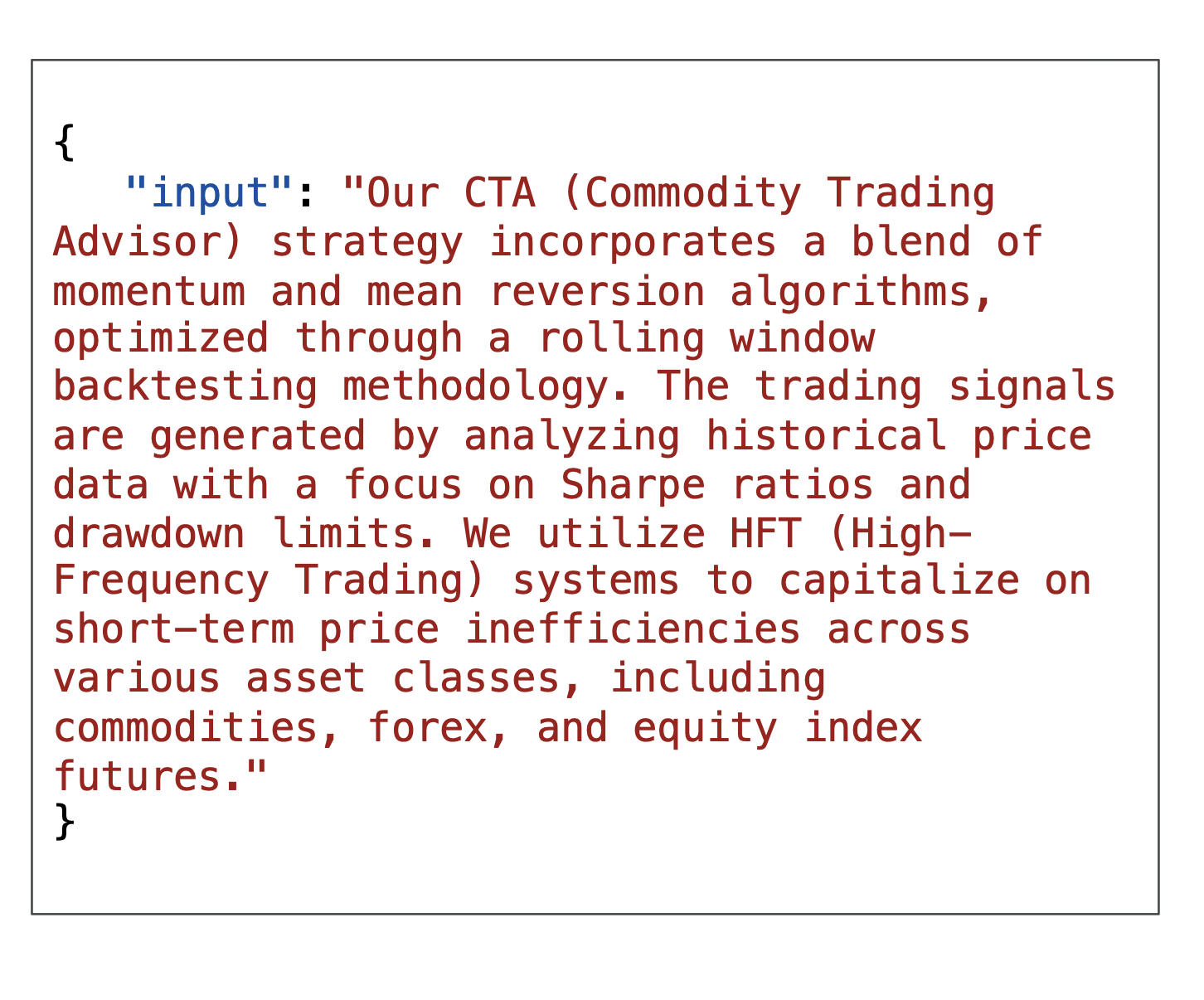

| Data Type | Labeled (prompt–response pairs) | Unlabeled (raw text) | Labeled or Unlabeled |

| Goal | Improve performance on domain-specific tasks | Make model expert in a specific domain | Adapt a pre-trained model to a new but related task |

| Example | Train chatbot to respond in a specific tone | Feed all AWS docs to become AWS expert | Adapt GPT for medical text classification |

| Changes Model Weights? | ✅ Yes | ✅ Yes | ✅ Yes |

| Complexity | Medium | High | Varies |

| Cost | Lower (less data) | Higher (more data) | Varies |

| Exam Keyword | “Labeled data”, “prompt-response” | “Unlabeled data”, “domain adaptation” | “Adapt model to new task” |

| Bedrock Support | Supported on some models | Supported on some models | General ML concept (not Bedrock-specific) |

Instruction-based Fine Tuning

Continued Pre-training

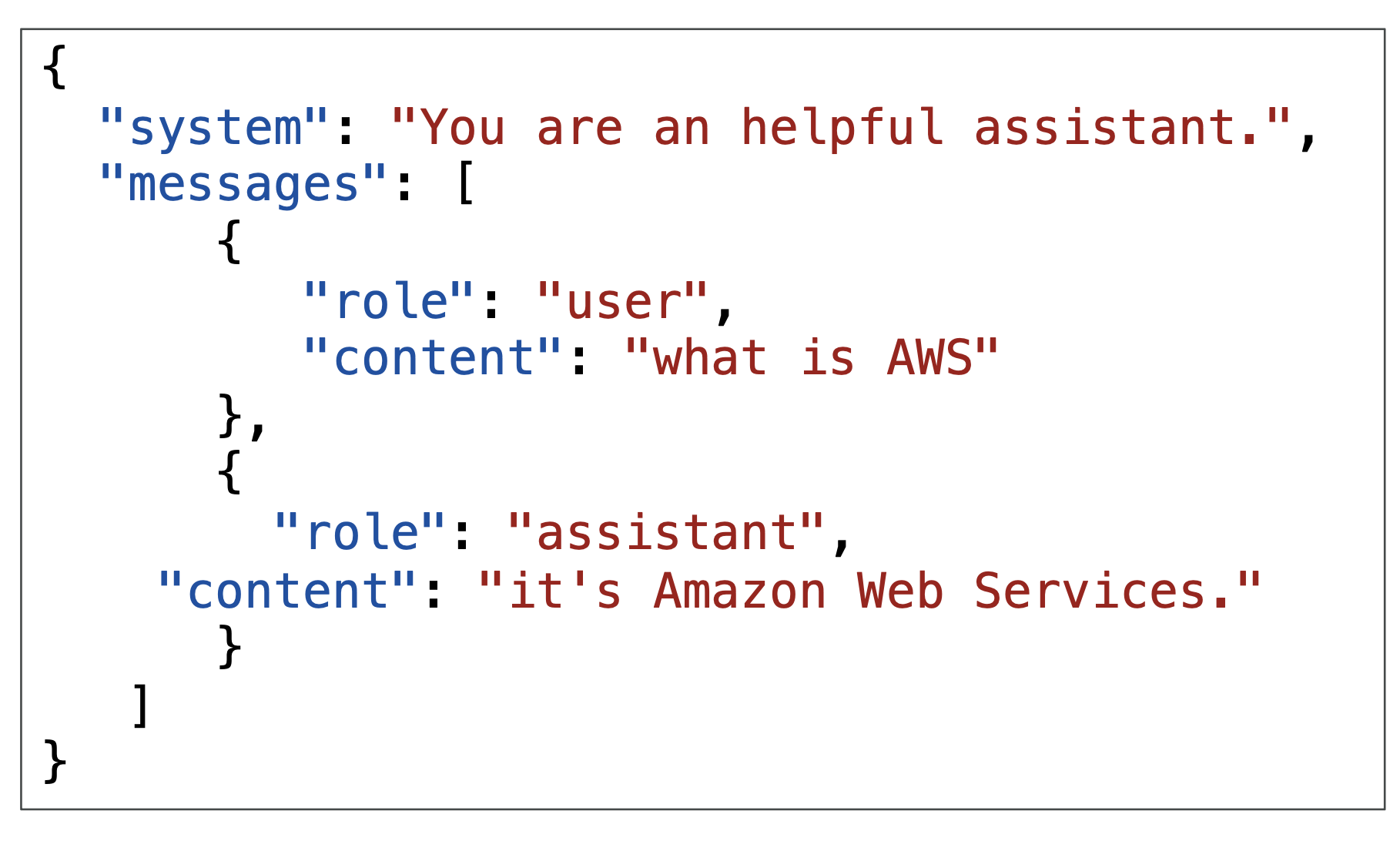

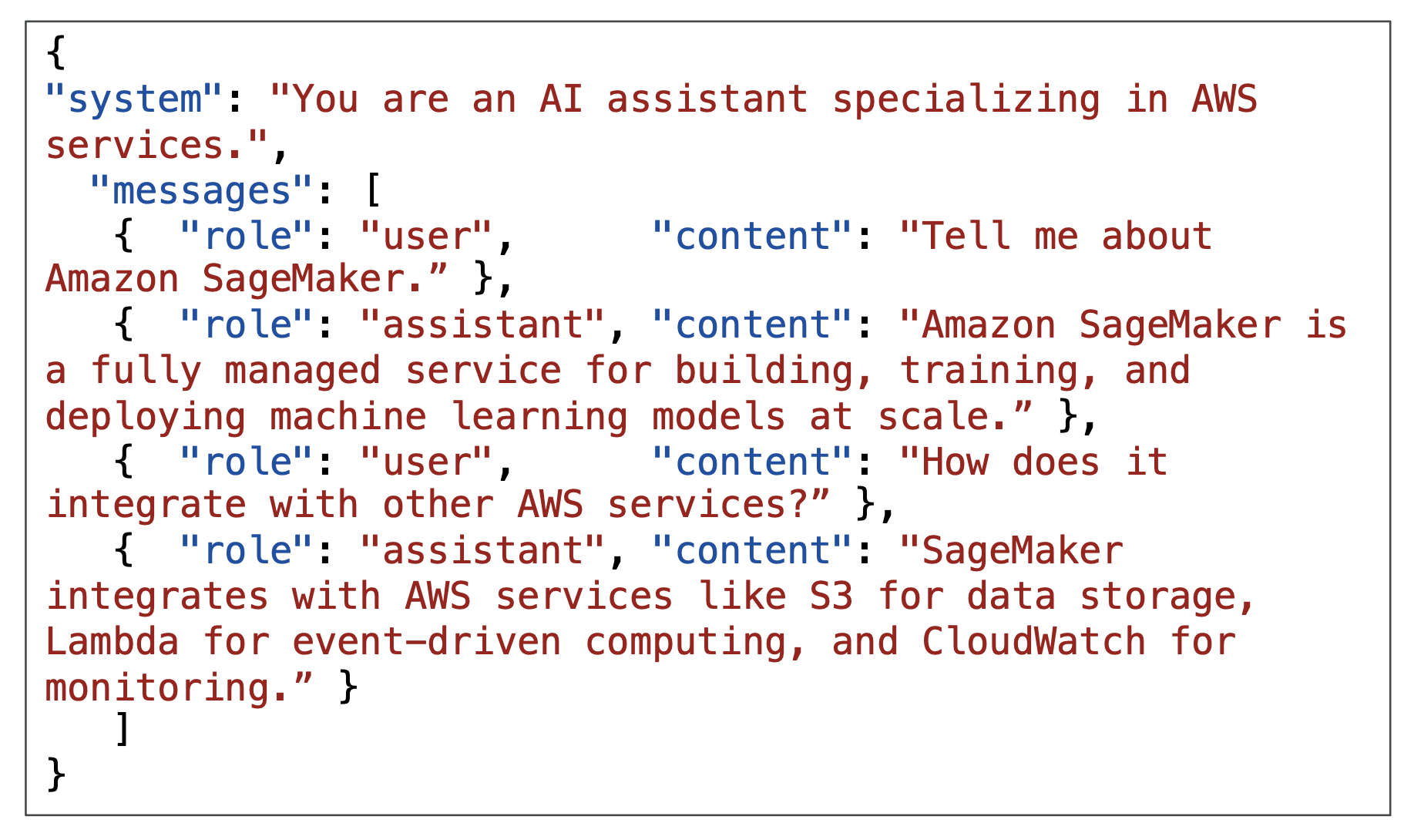
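To make the "labeled vs. unlabeled" distinction from the comparison table concrete, here is a minimal sketch of what the two kinds of training files might look like as JSON Lines. The field names `prompt`/`completion` and `input` are an assumption based on the format Bedrock documents for some text models; the exact schema depends on the base model, so check the Bedrock documentation before using it. The example texts are invented.

```python
import json


def to_jsonl(records):
    """Serialize a list of dicts as JSON Lines (one JSON object per line)."""
    return "\n".join(json.dumps(record) for record in records)


# Instruction-based fine-tuning: LABELED prompt-response pairs
instruction_records = [
    {"prompt": "What is Amazon S3?", "completion": "Amazon S3 is an object storage service."},
    {"prompt": "What is AWS Lambda?", "completion": "AWS Lambda runs code without managing servers."},
]

# Continued pre-training: UNLABELED raw text, no expected answer
pretraining_records = [
    {"input": "Amazon S3 stores objects in buckets. Each object has a key."},
    {"input": "AWS Lambda functions are triggered by events."},
]

instruction_jsonl = to_jsonl(instruction_records)
pretraining_jsonl = to_jsonl(pretraining_records)

print(instruction_jsonl)
print(pretraining_jsonl)
```

The only structural difference is the presence of a target answer: labeled records tell the model what to respond, unlabeled records only expose it to more domain text.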

4. Messaging Fine-Tuning

- Single-Turn Messaging:

  - One Q → One A

  - Optional `system` context

- Multi-Turn Messaging:

  - Full conversations, alternating `user` and `assistant` turns

  - Used for chatbot training in multi-step dialog
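As a rough illustration of the two messaging styles, the sketch below builds one single-turn and one multi-turn training record and checks that roles alternate. The `system` / `messages` / `role` / `content` layout is an assumption modeled on the conversational format Bedrock documents for some models; other models use a different schema, and the helper names `make_conversation` and `roles_alternate` are hypothetical.

```python
import json


def make_conversation(turns, system=None):
    """Build one training record from (user_text, assistant_text) pairs.

    The record layout is an assumed example, not a guaranteed Bedrock schema.
    """
    messages = []
    for user_text, assistant_text in turns:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    record = {"messages": messages}
    if system is not None:
        # The system context is optional
        record = {"system": system, "messages": messages}
    return record


def roles_alternate(record):
    """Return True if messages go user, assistant, user, assistant, ..."""
    expected = ("user", "assistant")
    return all(
        message["role"] == expected[i % 2]
        for i, message in enumerate(record["messages"])
    )


# Single-turn: one question, one answer, with optional system context
single_turn = make_conversation(
    [("What is Amazon Bedrock?", "A managed service for foundation models.")],
    system="You are a concise AWS tutor.",
)

# Multi-turn: a full conversation with alternating roles
multi_turn = make_conversation(
    [
        ("Can I fine-tune models in Bedrock?", "Yes, for some models."),
        ("Where does my training data go?", "It must be stored in Amazon S3."),
    ]
)

print(json.dumps(single_turn))
print(json.dumps(multi_turn))
```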

5. Transfer Learning

- Definition: Using a pre-trained model for a new but related task.

- Common in:

  - Image classification

  - NLP (BERT, GPT)

- Exam Tip: If the question is about general ML → choose Transfer Learning. If it is about Bedrock and domain-specific data → choose Fine-Tuning.
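A toy sketch can show the idea of reusing a pre-trained model for a related task. It illustrates one common variant only (feature extraction: the pre-trained layer is kept frozen and a small new "head" is trained on top); other variants also update the pre-trained weights. The weights, data, and function names here are invented for illustration and do not come from any real model.

```python
# Hypothetical "pre-trained" layer. In this variant it is frozen (never updated).
PRETRAINED_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def extract_features(x):
    """Apply the frozen layer: weighted sum followed by ReLU."""
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)))
        for row in PRETRAINED_WEIGHTS
    ]


def predict(x, head, bias):
    """Classify x with the new task-specific head on top of frozen features."""
    feats = extract_features(x)
    score = sum(h * f for h, f in zip(head, feats)) + bias
    return 1 if score > 0 else 0


def train_head(samples, labels, epochs=20, lr=0.1):
    """Train only the new head with the perceptron update rule."""
    head = [0.0] * len(PRETRAINED_WEIGHTS)
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(x, head, bias)
            feats = extract_features(x)
            head = [h + lr * error * f for h, f in zip(head, feats)]
            bias += lr * error
    return head, bias


# Small labeled dataset for the NEW task
samples = [(2, 2), (3, 1), (-1, -2), (-2, -1)]
labels = [1, 1, 0, 0]

head, bias = train_head(samples, labels)
predictions = [predict(x, head, bias) for x in samples]
print(predictions)
```

Because only the small head is trained, the new task needs far less data and compute than training a model from scratch, which is the main appeal of transfer learning.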

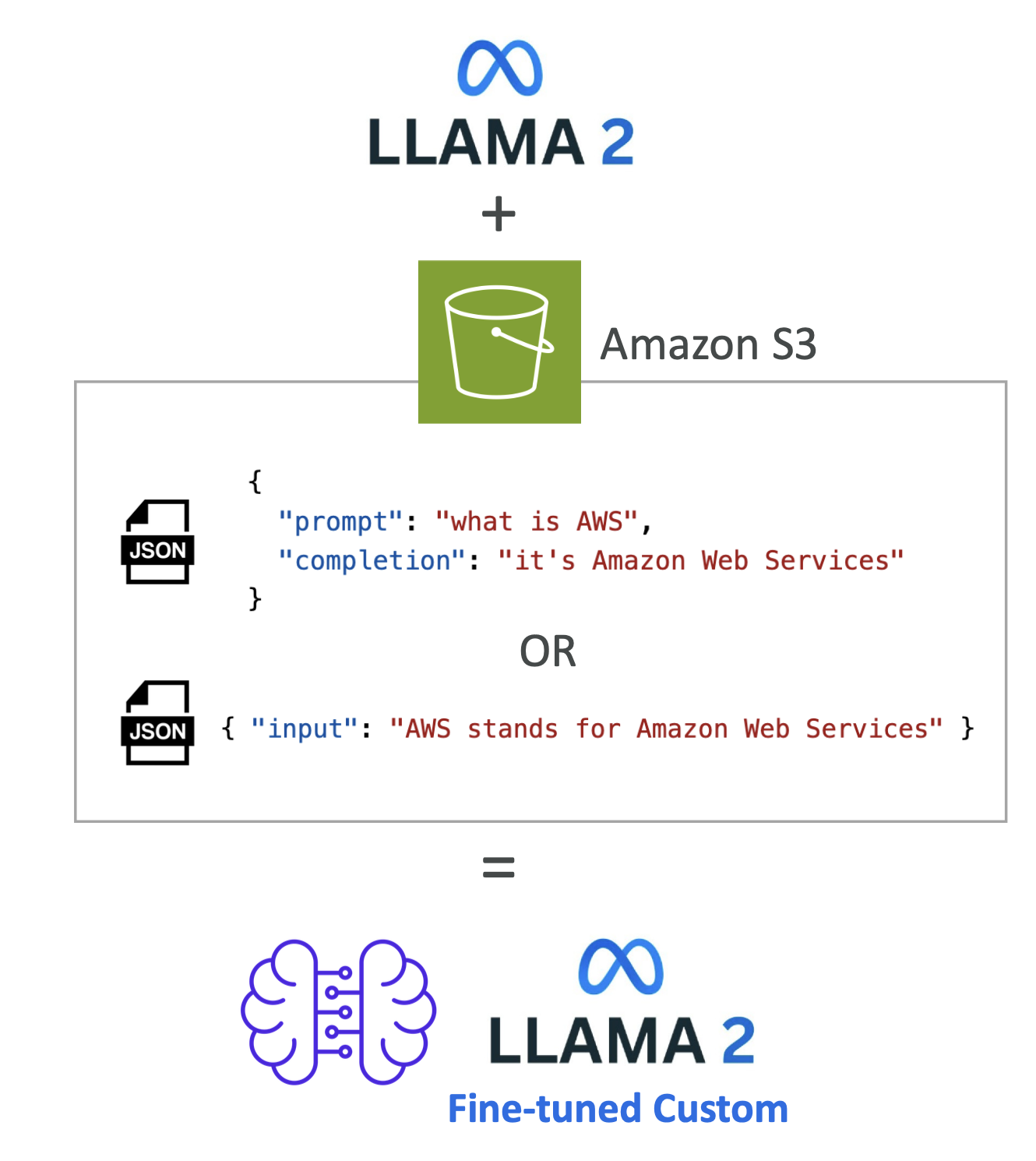

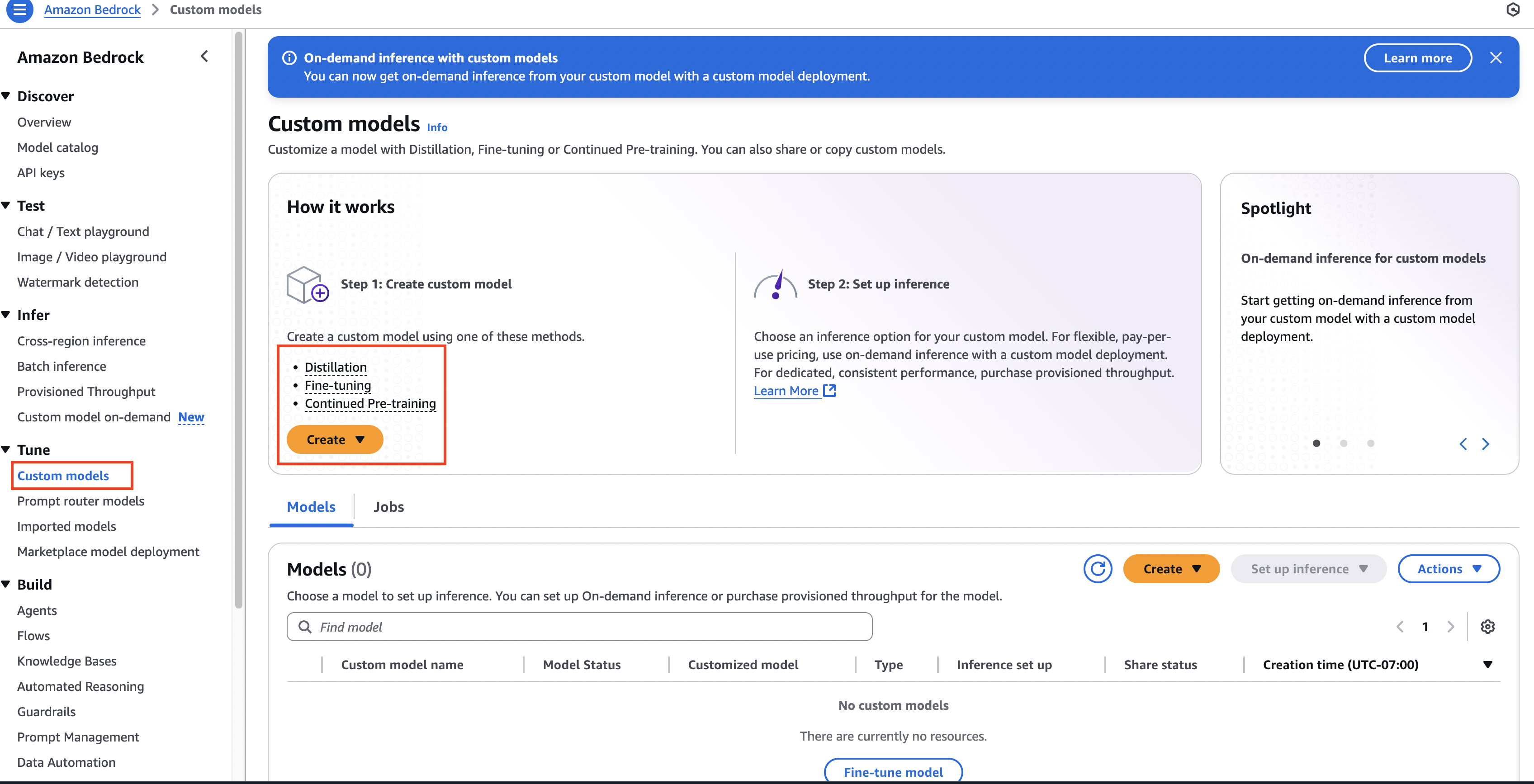

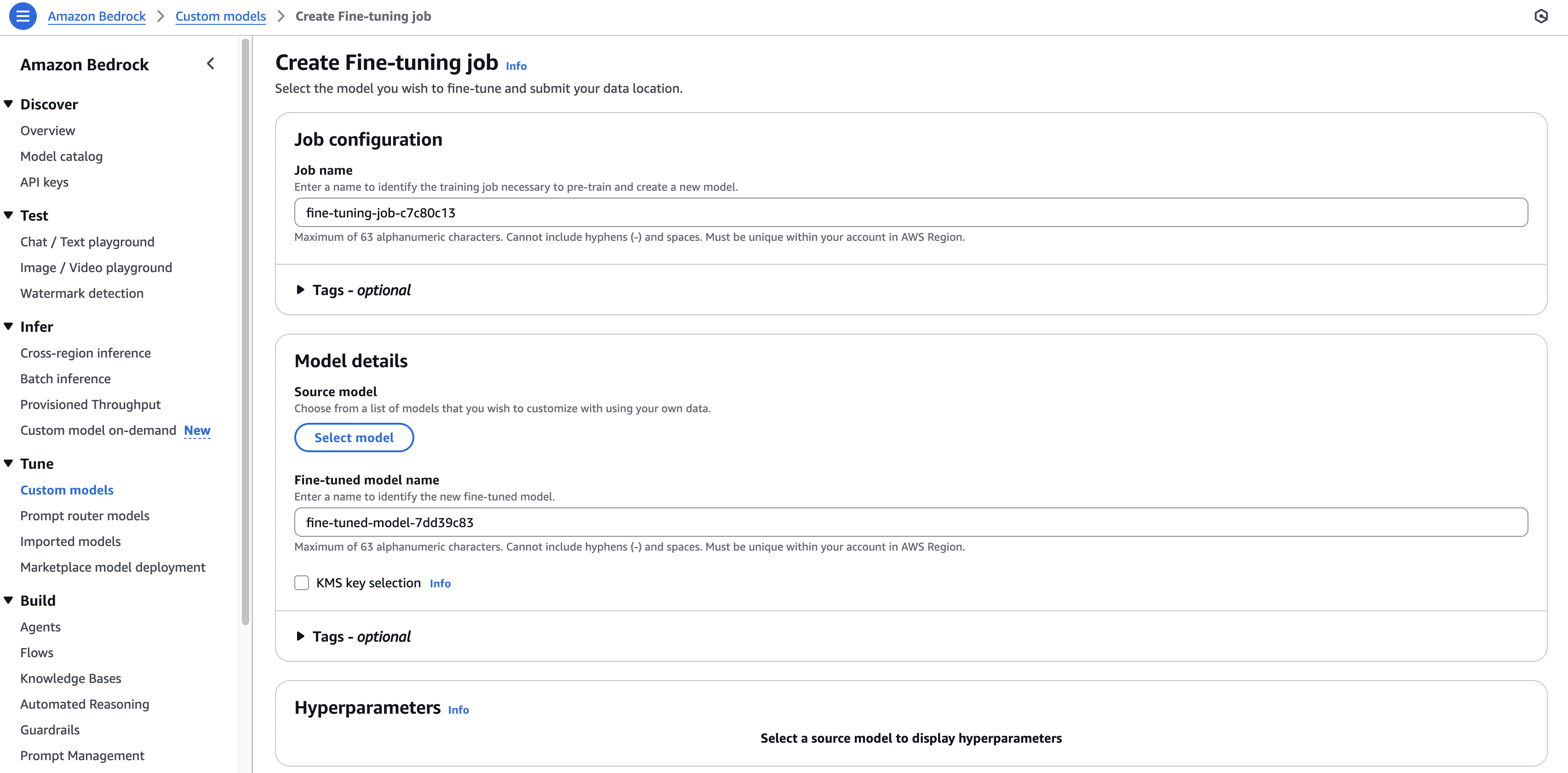

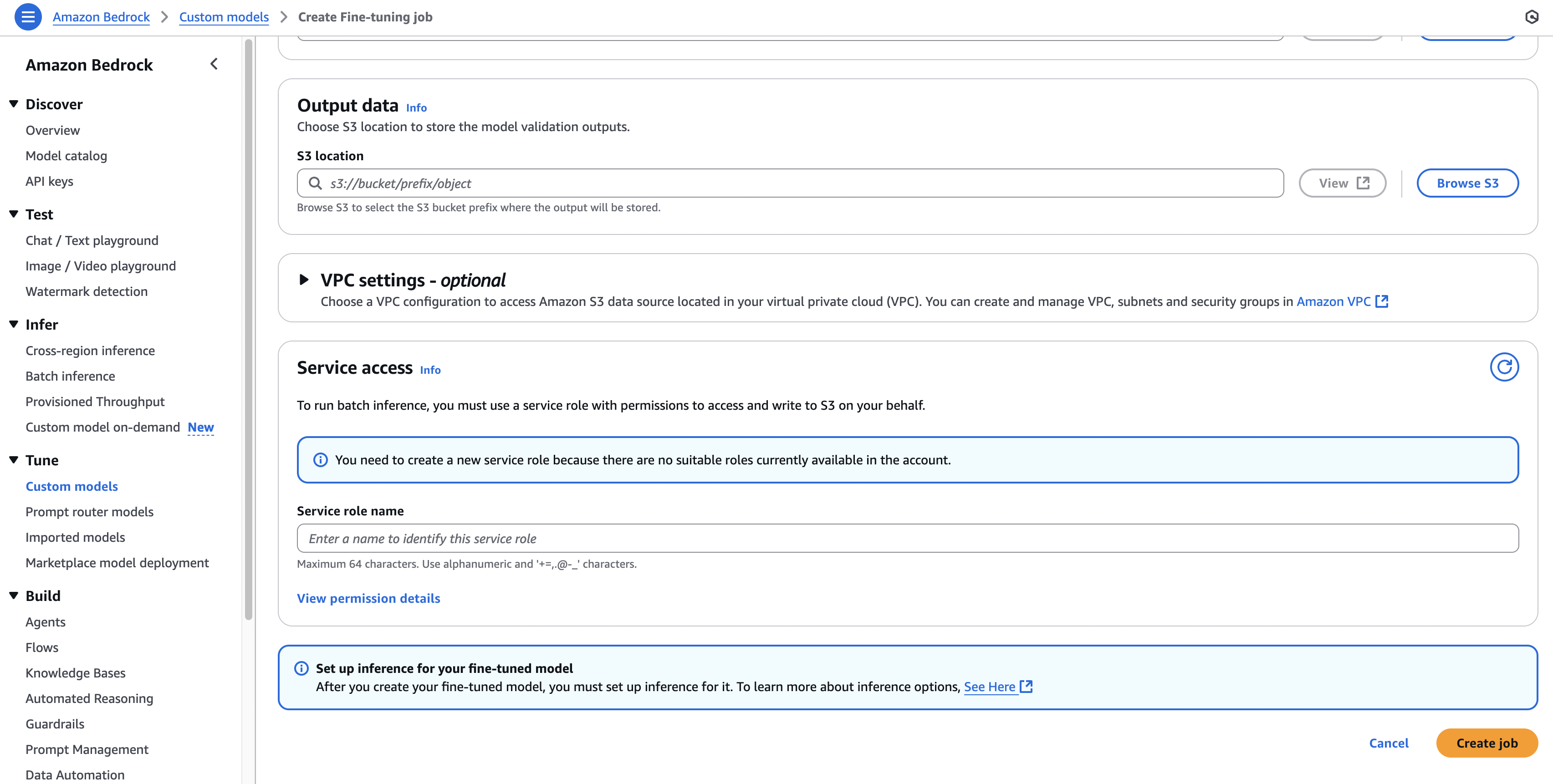

6. Fine-Tuning Requirements in Amazon Bedrock

- Training data must:

  - Be in Amazon S3

  - Follow specific formatting

- Provisioned Throughput is required for:

  - Creating the custom model

  - Using the custom model

- Not all models can be fine-tuned (usually open-source models are supported).
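The requirements above map onto the parameters of a model customization job. The sketch below only builds and validates the request as a plain dictionary, so it runs without AWS credentials. The parameter names follow boto3's `create_model_customization_job` as I understand it; the helper name, bucket, role ARN, and base model ID are hypothetical placeholders, and model-specific hyperparameters are omitted.

```python
ALLOWED_TYPES = ("FINE_TUNING", "CONTINUED_PRE_TRAINING")


def build_customization_request(
    job_name,
    model_name,
    role_arn,
    base_model_id,
    training_s3_uri,
    output_s3_uri,
    customization_type="FINE_TUNING",
):
    """Build keyword arguments for a Bedrock model customization job.

    Hypothetical helper: it enforces the "data must be in S3" requirement
    and returns a dict; it does not call AWS.
    """
    for uri in (training_s3_uri, output_s3_uri):
        if not uri.startswith("s3://"):
            raise ValueError(f"Expected an S3 URI, got: {uri}")
    if customization_type not in ALLOWED_TYPES:
        raise ValueError(f"Unsupported customization type: {customization_type}")
    return {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "customizationType": customization_type,
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
    }


request = build_customization_request(
    job_name="example-job",
    model_name="example-custom-model",
    role_arn="arn:aws:iam::123456789012:role/ExampleBedrockRole",  # placeholder
    base_model_id="example-base-model-id",                         # placeholder
    training_s3_uri="s3://example-bucket/train.jsonl",
    output_s3_uri="s3://example-bucket/output/",
)
print(request)

# With boto3 installed and credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("bedrock").create_model_customization_job(**request)
```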

7. Common Use Cases

- Chatbot with specific persona, tone, or target audience

- Adding up-to-date knowledge

- Integrating exclusive private data (customer logs, internal documents)

- Improving categorization, accuracy, or response style

8. Exam Tips

- Keyword Mapping:

  - “Labeled data” → Instruction-based Fine-Tuning

  - “Unlabeled data” / “Domain adaptation” → Continued Pre-Training

  - “Adapt to a new related task” → Transfer Learning

- Provisioned Throughput is a must for custom models in Bedrock.

- Fine-tuning changes weights of the base model → creates a private version.

- Compare models not just on quality, but also latency and token cost.

9. Good to know

- Re-training an FM requires a higher budget

- Instruction-based fine-tuning is usually cheaper because the computations are less intensive and less data is usually required

- Re-training also requires experienced ML engineers to perform the task

- You must prepare the data, do the fine-tuning, evaluate the model

- Running a fine-tuned model is also more expensive (provisioned throughput)

10. Provisioned Throughput (Amazon Bedrock)

Definition:

Reserving a fixed amount of processing capacity for your custom (fine-tuned) model.

Why Needed

- Fine-tuned models run on dedicated resources.

- Ensures consistent speed, low latency, and predictable costs.

- Prevents slowdowns during high demand.

With vs Without

- Without: Performance can drop when traffic spikes.

- With: Guaranteed performance for the reserved capacity.

Exam Tip: For custom models in Bedrock, provisioned throughput is required.
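Because capacity is reserved, the cost is fixed regardless of how much traffic the model actually receives. The arithmetic below sketches that trade-off. The hourly price and request volumes are invented placeholders, not real Bedrock pricing, and 730 hours per month is an approximation.

```python
HOURS_PER_MONTH = 730  # approximate number of hours in a month


def monthly_provisioned_cost(model_units, hourly_price_per_unit, hours=HOURS_PER_MONTH):
    """Fixed monthly cost of reserved capacity, independent of traffic."""
    return model_units * hourly_price_per_unit * hours


def cost_per_request(monthly_cost, requests_per_month):
    """Effective cost of one request at a given monthly volume."""
    return monthly_cost / requests_per_month


# Hypothetical: 2 model units at $20.00 per unit per hour
monthly_cost = monthly_provisioned_cost(model_units=2, hourly_price_per_unit=20.0)

low_traffic = cost_per_request(monthly_cost, requests_per_month=10_000)
high_traffic = cost_per_request(monthly_cost, requests_per_month=1_000_000)

print(f"Monthly cost: ${monthly_cost:,.2f}")
print(f"Per request at low traffic:  ${low_traffic:.4f}")
print(f"Per request at high traffic: ${high_traffic:.4f}")
```

The same reserved capacity is much cheaper per request when it is heavily used, which is why running a fine-tuned model tends to be expensive for low-traffic workloads.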

All articles on this blog are licensed under CC BY-NC-SA 4.0 unless otherwise stated.