AWS Certified AI Practitioner (16) - Prompt Engineering Techniques

🎯 Prompt Engineering Techniques

Understanding different prompting techniques is essential for getting the most out of Large Language Models (LLMs). These concepts are also important for AWS certification exams, especially when dealing with Amazon Bedrock and generative AI.

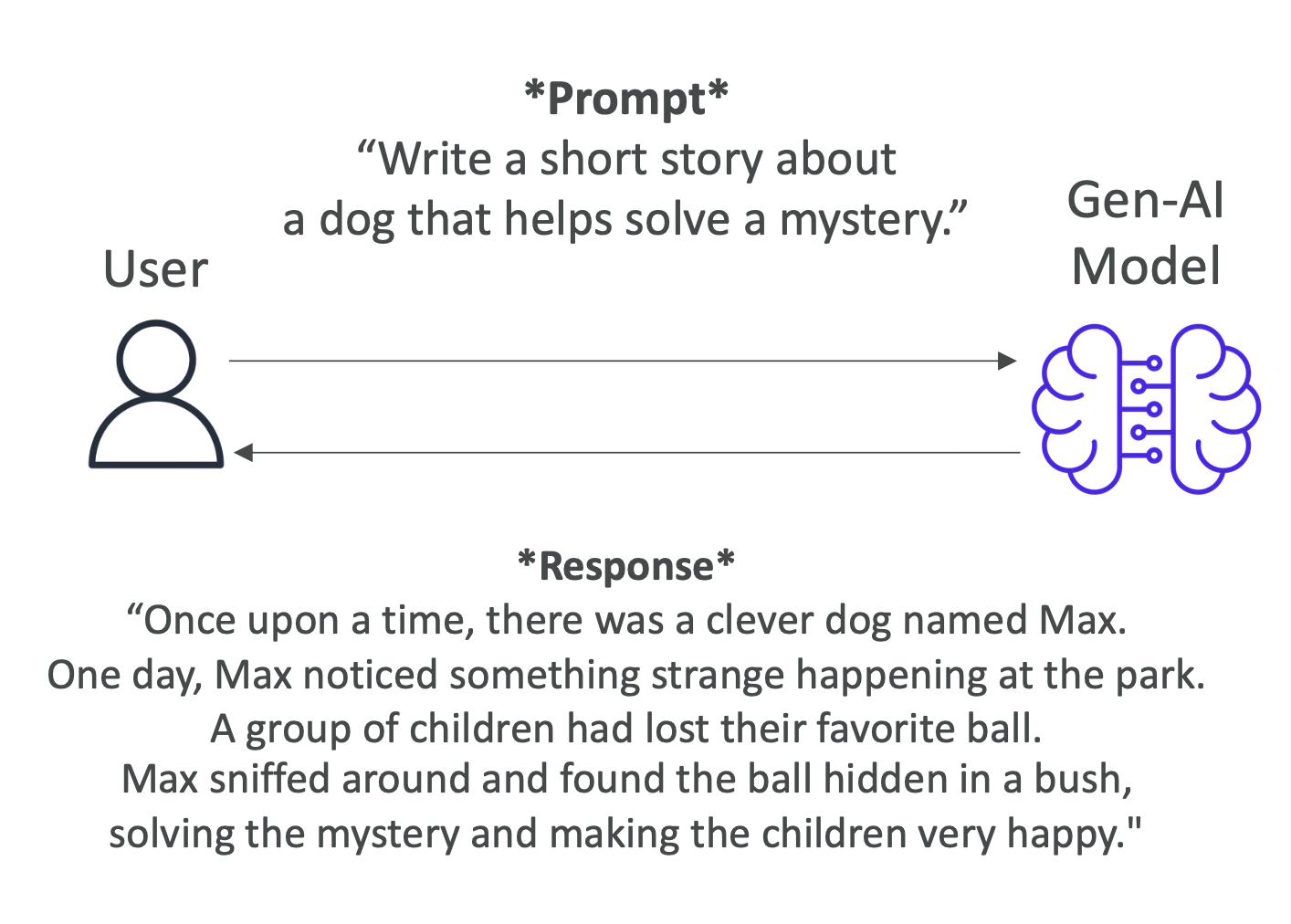

1. 🔹 Zero-Shot Prompting

Definition:

Present a task to the model without providing any examples or prior training for that specific task.

Prompt Example:

```text
Write a short story about a dog that helps solve a mystery.
```

Response Example:

```text
Once upon a time, there was a clever dog named Max. One day, Max noticed something strange happening at the park...
```

- Relies entirely on the model’s general knowledge.

- The larger and more capable the Foundation Model (FM), the better the results tend to be.

- Called zero-shot because the model receives no prior examples (“shots”).
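To make this concrete, a zero-shot request carries the task and nothing else. The sketch below builds keyword arguments for the Amazon Bedrock Runtime Converse API as a plain dictionary. `build_zero_shot_request`, the placeholder model ID, and the inference settings are assumptions for illustration. The actual call is left as a comment because it needs AWS credentials and `boto3`.

```python
def build_zero_shot_request(task: str, model_id: str) -> dict:
    """Build keyword arguments for a Bedrock Runtime Converse call.

    Zero-shot: the task is sent on its own, with no example input/output pairs.
    """
    return {
        "modelId": model_id,
        "messages": [
            {"role": "user", "content": [{"text": task}]},
        ],
        # Assumed settings; tune for your model and use case.
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.7},
    }


# Hypothetical placeholder; replace with a model ID enabled in your account.
MODEL_ID = "example-model-id"
request = build_zero_shot_request(
    "Write a short story about a dog that helps solve a mystery.", MODEL_ID
)

# With AWS credentials and boto3 available, the request could be sent like this:
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   response = client.converse(**request)
#   print(response["output"]["message"]["content"][0]["text"])
print(request["messages"][0]["content"][0]["text"])
```

Note that the `messages` list holds a single user turn: there are no "shots" for the model to imitate.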

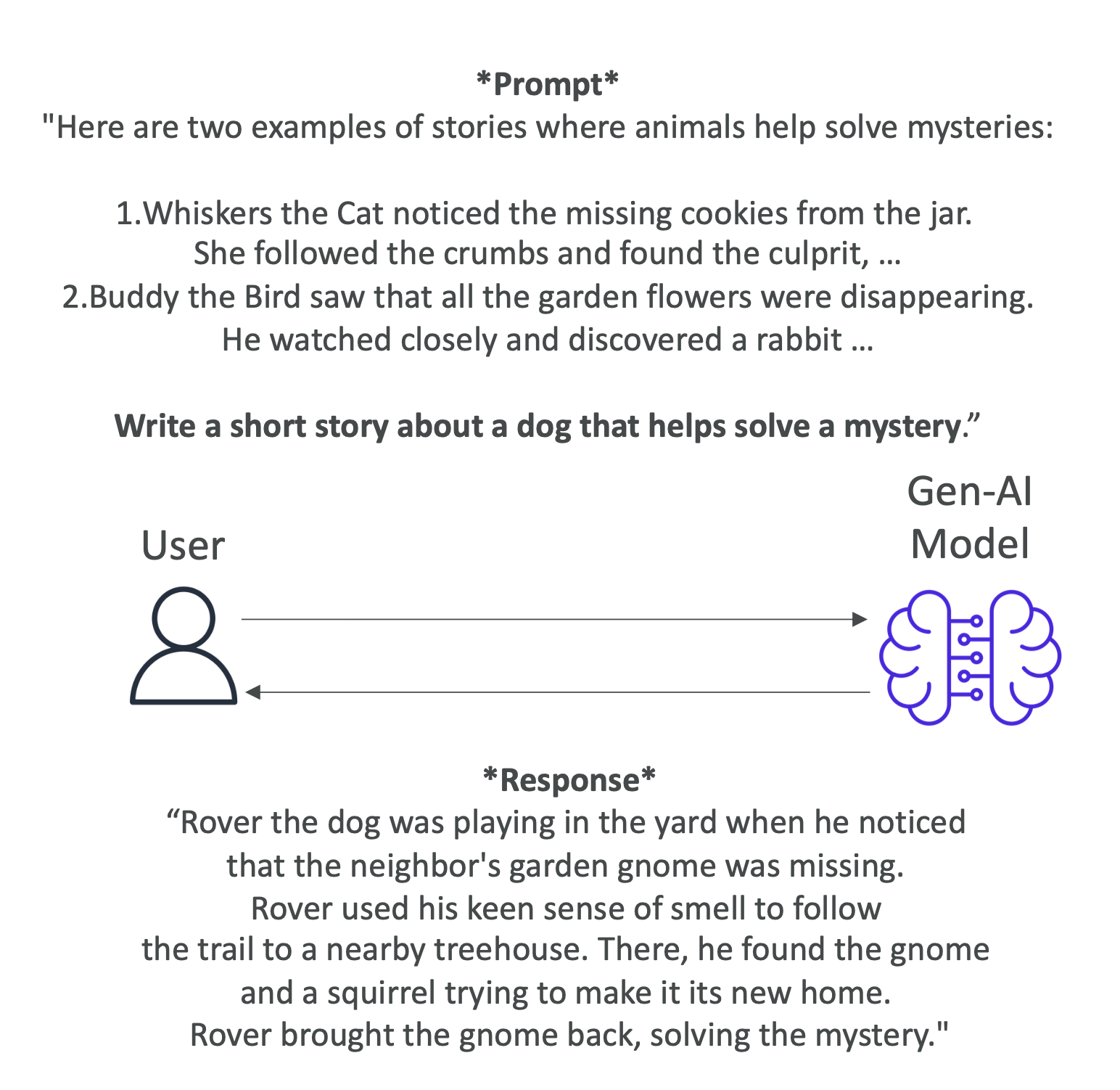

2. 🔹 Few-Shot Prompting

Definition:

Provide the model with a few examples to guide its output.

Prompt Example:

```text
Here are two examples of stories where animals help solve mysteries:
```

Response Example:

```text
Rover the dog was playing in the yard when he noticed the neighbor’s garden gnome was missing...
```

- Helps the model mimic the style and structure of given examples.

- One-Shot Prompting = providing only one example.

- Effective when you need consistent formatting or tone in the output.
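A few-shot prompt is simply the instruction, followed by worked examples in a fixed layout, followed by the new input in that same layout. The helper below is a hypothetical sketch. The `Input:`/`Output:` labels and the placeholder example stories are invented for illustration. Passing a single example turns it into one-shot prompting.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    With a single example this is one-shot prompting.
    """
    lines = [instruction, ""]
    for i, (example_input, example_output) in enumerate(examples, start=1):
        lines.append(f"Example {i}")
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The new task reuses the Input/Output layout and leaves Output for the model.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical placeholder examples, invented for illustration.
examples = [
    ("A cat and a lost key",
     "Whiskers pawed at the rug until the missing key slid out from underneath."),
    ("A parrot and a stolen cookie",
     "Polly repeated the thief's name every time someone opened the cookie jar."),
]
prompt = build_few_shot_prompt(
    "Write a one-sentence story in the same style as the examples.",
    examples,
    "A dog and a missing garden gnome",
)
print(prompt)
```

Keeping the example layout identical to the final input is what lets the model pick up the expected format and tone.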

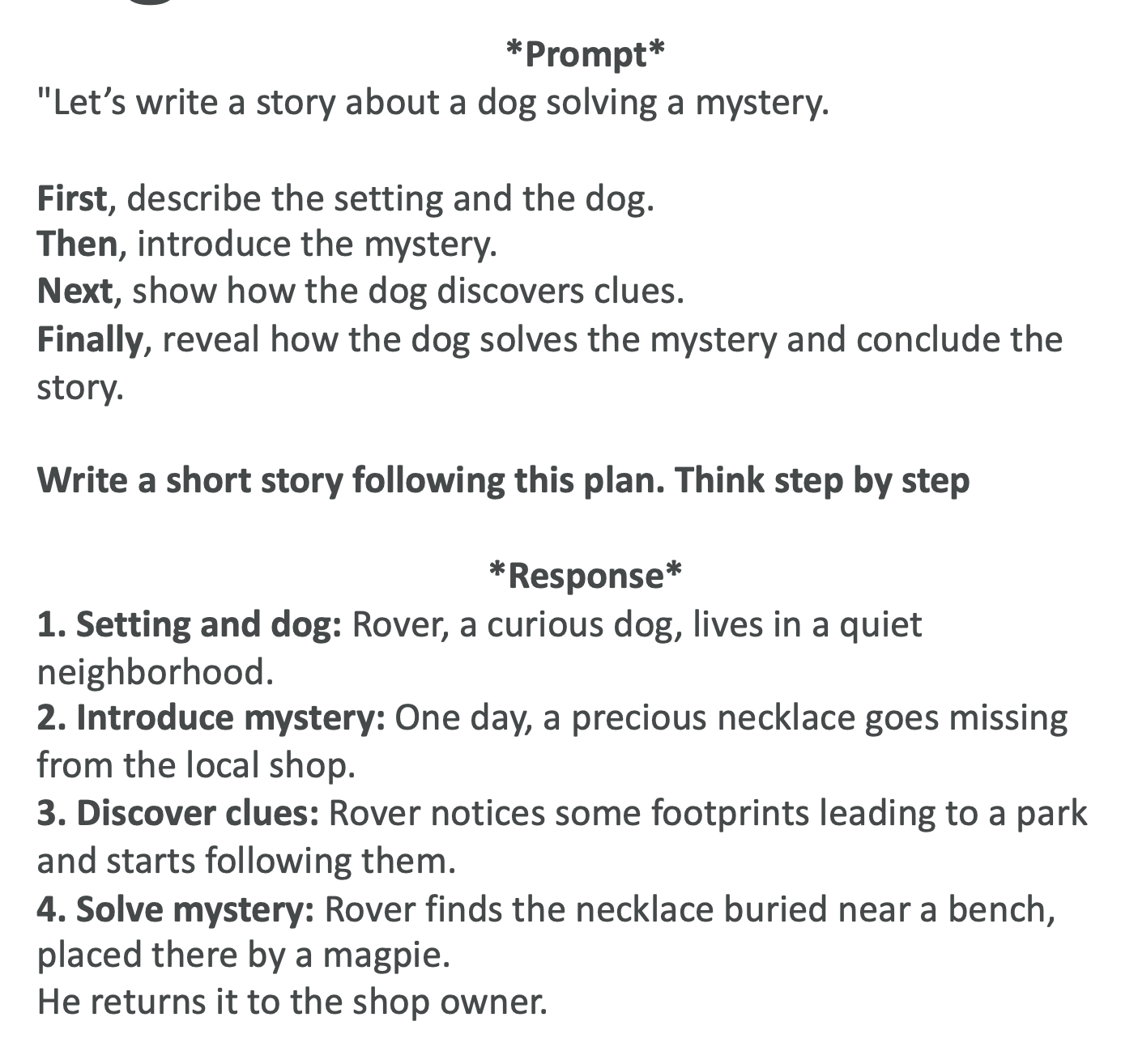

3. 🔹 Chain-of-Thought (CoT) Prompting

Definition:

Guide the model to break the task into explicit intermediate steps and reason through them in order, for example by adding “Think step by step” to the prompt.

Prompt Example:

```text
Let’s write a story about a dog solving a mystery.
```

Response Example:

```text
- Rover, a curious dog, lives in a quiet neighborhood.
- One day, a necklace goes missing…
- Rover follows footprints to the park…
- He finds the necklace hidden by a magpie…
```

- Produces more structured and logical responses.

- Useful for problem-solving tasks like math, reasoning, or multi-step workflows.

- Can be combined with Zero-Shot or Few-Shot prompting.
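Chain-of-thought guidance can be added either as a generic cue or as an explicit list of steps. This is a minimal sketch with a hypothetical `build_cot_prompt` helper; the cue wording and the story steps are assumptions, not a fixed formula. Because it only changes the prompt text, its output could also be placed after few-shot examples.

```python
from typing import List, Optional


def build_cot_prompt(task: str, steps: Optional[List[str]] = None) -> str:
    """Add chain-of-thought guidance to a task prompt.

    Without explicit steps, append a generic cue (often called zero-shot CoT).
    With steps, spell out the reasoning order the model should follow.
    """
    if not steps:
        return f"{task}\nThink step by step before giving the final answer."
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"{task}\nWork through these steps in order:\n{numbered}"


# Generic cue, e.g. for a multi-step arithmetic question.
print(build_cot_prompt(
    "A shelter has 12 dogs, adopts out 5, and takes in 3 more. How many dogs are there now?"
))

# Explicit steps for the story task; the step wording is a hypothetical example.
story_prompt = build_cot_prompt(
    "Let's write a story about a dog solving a mystery.",
    [
        "Describe the dog and the setting.",
        "Introduce the mystery.",
        "Show the dog finding clues.",
        "Reveal how the dog solves the mystery.",
    ],
)
print(story_prompt)
```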

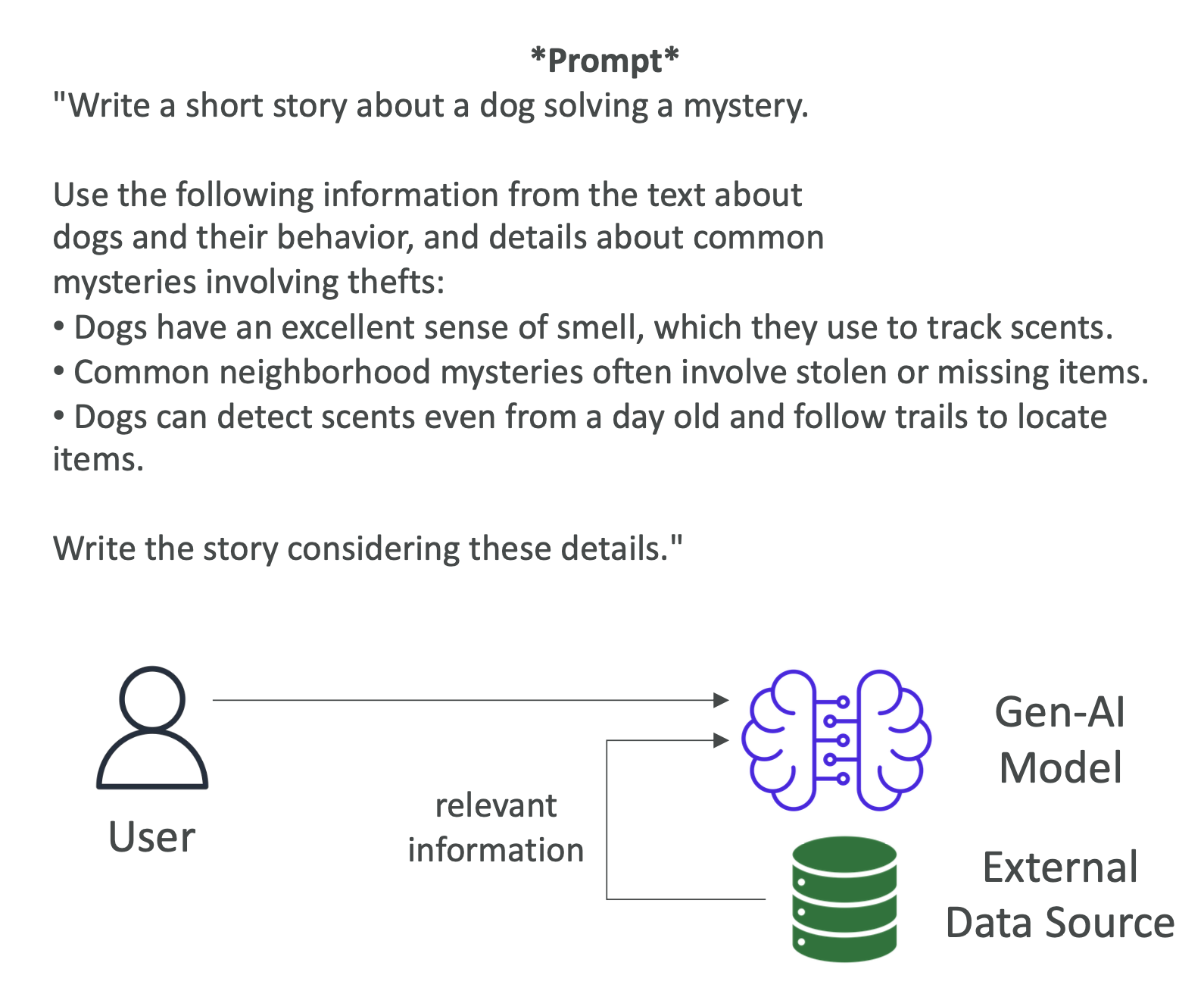

4. 🔹 Retrieval-Augmented Generation (RAG)

Definition:

Combine the model’s generative ability with external data sources to create more accurate, context-aware responses.

How it works:

- A user query comes in.

- The query is used to retrieve relevant information from an external source (e.g., a vector database built from documents stored in Amazon S3).

- The retrieved data is added to the prompt (augmentation).

- The model generates a context-rich answer from the augmented prompt.
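The retrieval and augmentation steps above can be sketched in plain Python, leaving out the generation call. This is a toy under stated assumptions: a bag-of-words count vector stands in for a real embedding model, an in-memory list stands in for a vector database, and `embed`, `cosine`, `retrieve` and `augment` are hypothetical helper names. Retrieval ranks documents by cosine similarity and keeps the top k, which is the k-nearest-neighbor idea that vector search relies on.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Retrieval: return the k documents most similar to the query (brute-force KNN)."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def augment(query: str, passages: List[str]) -> str:
    """Augmentation: place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical mini knowledge base.
documents = [
    "Dogs can follow a scent trail for long distances.",
    "Magpies are often said to collect shiny objects.",
    "Amazon S3 stores objects in buckets.",
]
query = "How does a dog follow a scent trail?"
prompt = augment(query, retrieve(query, documents, k=1))
print(prompt)
# Generation (not shown): send `prompt` to a foundation model, e.g. via Amazon Bedrock.
```

A managed service replaces the toy parts with a real embedding model and vector store, but the retrieve-then-augment shape stays the same.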

Prompt Example:

```text
Write a short story about a dog solving a mystery.
```

Response Example:

```text
Rover sniffed the ground and followed the trail of a missing toy hidden in a bush...
```

- Helps ground outputs in real or domain-specific knowledge instead of relying only on what the model learned during training.

- In Amazon Bedrock, RAG is typically implemented with Amazon Bedrock Knowledge Bases, which use vector search (e.g., k-nearest neighbors, KNN) to find the most relevant content.

📌 Exam Tips

- Zero-Shot = no examples, rely on model’s knowledge.

- Few-Shot = provide examples to guide responses (One-Shot = just one example).

- Chain-of-Thought = “Think step by step” for logical reasoning.

- RAG = augment the prompt with retrieved external data → helps keep answers up to date and factual.

✅ Summary:

Prompt Engineering techniques allow you to control and optimize LLM behavior.

- Use Zero-Shot when you want quick general answers.

- Use Few-Shot for consistent style and formatting.

- Use CoT Prompting for structured, logical reasoning.

- Use RAG when accuracy and domain-specific knowledge are critical.