AWS Certified AI Practitioner (25) - Reinforcement Learning

🧠 Reinforcement Learning (RL) & RLHF

1. What is Reinforcement Learning (RL)?

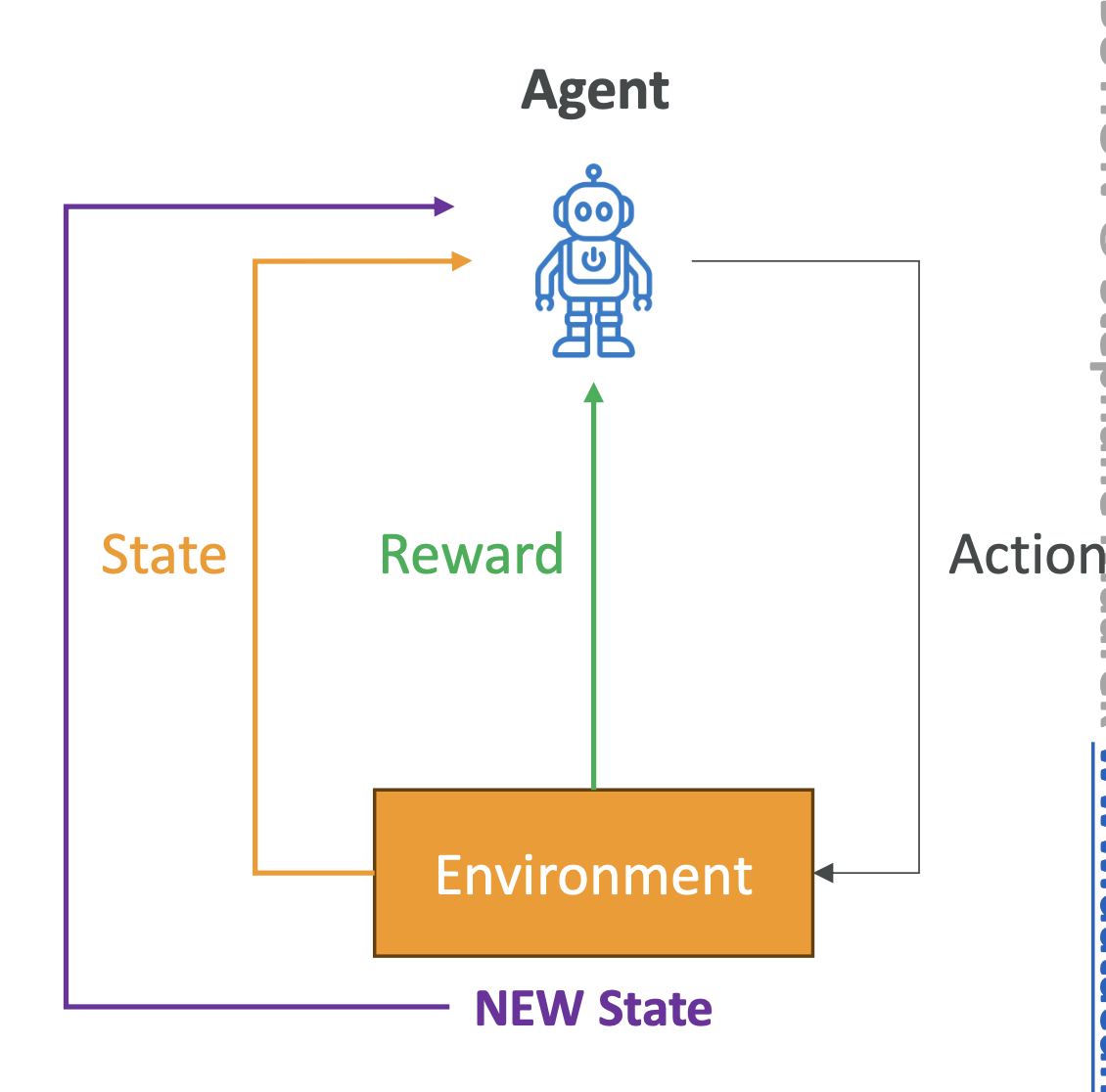

Reinforcement Learning is a type of machine learning where an agent learns to make decisions by interacting with an environment and maximizing rewards.

- Agent → the learner or decision-maker (e.g., a robot, software bot).
- Environment → the external system the agent interacts with (e.g., a maze, stock market).
- State → the current situation of the environment.
- Action → the choice the agent makes.
- Reward → feedback (positive or negative) from the environment.
- Policy → the strategy the agent follows to decide its next action.

👉 Exam Tip: RL is less common in AWS certification questions, but you may see it in contexts like robotics, gaming, or reinforcement learning from human feedback (RLHF) in generative AI.

2. How Does RL Work?

- The agent observes the current state.
- It selects an action based on its policy.
- The environment transitions to a new state and gives a reward.
- The agent updates its policy to improve future actions.

🎯 Goal: Maximize cumulative rewards over time.
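
The four steps above can be sketched as a short Python loop. This is only an illustration: `LineWorld` and `RandomAgent` are made-up names (not from AWS or any library), the reward values are arbitrary, and the `update` method is a stub that marks where a learning rule would adjust the policy.

```python
import random


class LineWorld:
    """Hypothetical toy environment: positions 0..4, the goal is position 4."""
    GOAL = 4

    def reset(self):
        self.pos = 0
        return self.pos  # initial state

    def step(self, action):
        # action is -1 (left) or +1 (right); the position is clamped to [0, GOAL]
        self.pos = max(0, min(self.GOAL, self.pos + action))
        done = self.pos == self.GOAL
        reward = 10 if done else -1  # illustrative values only
        return self.pos, reward, done


class RandomAgent:
    """Placeholder agent: random policy, no learning."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def act(self, state):
        return self.rng.choice([-1, +1])

    def update(self, state, action, reward, next_state):
        pass  # a learning rule would adjust the policy here


def run_episode(env, agent, max_steps=1000):
    state = env.reset()
    total_reward = 0
    for _ in range(max_steps):
        action = agent.act(state)                        # observe state, select action
        next_state, reward, done = env.step(action)      # environment transitions, gives reward
        agent.update(state, action, reward, next_state)  # policy update (stub here)
        total_reward += reward                           # cumulative reward
        state = next_state
        if done:
            break
    return total_reward
```

With a policy that always moves right, the episode takes four steps and the cumulative reward is -1 - 1 - 1 + 10 = 7. A random policy usually wanders and ends up with a lower total, which is the gap that learning is meant to close.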

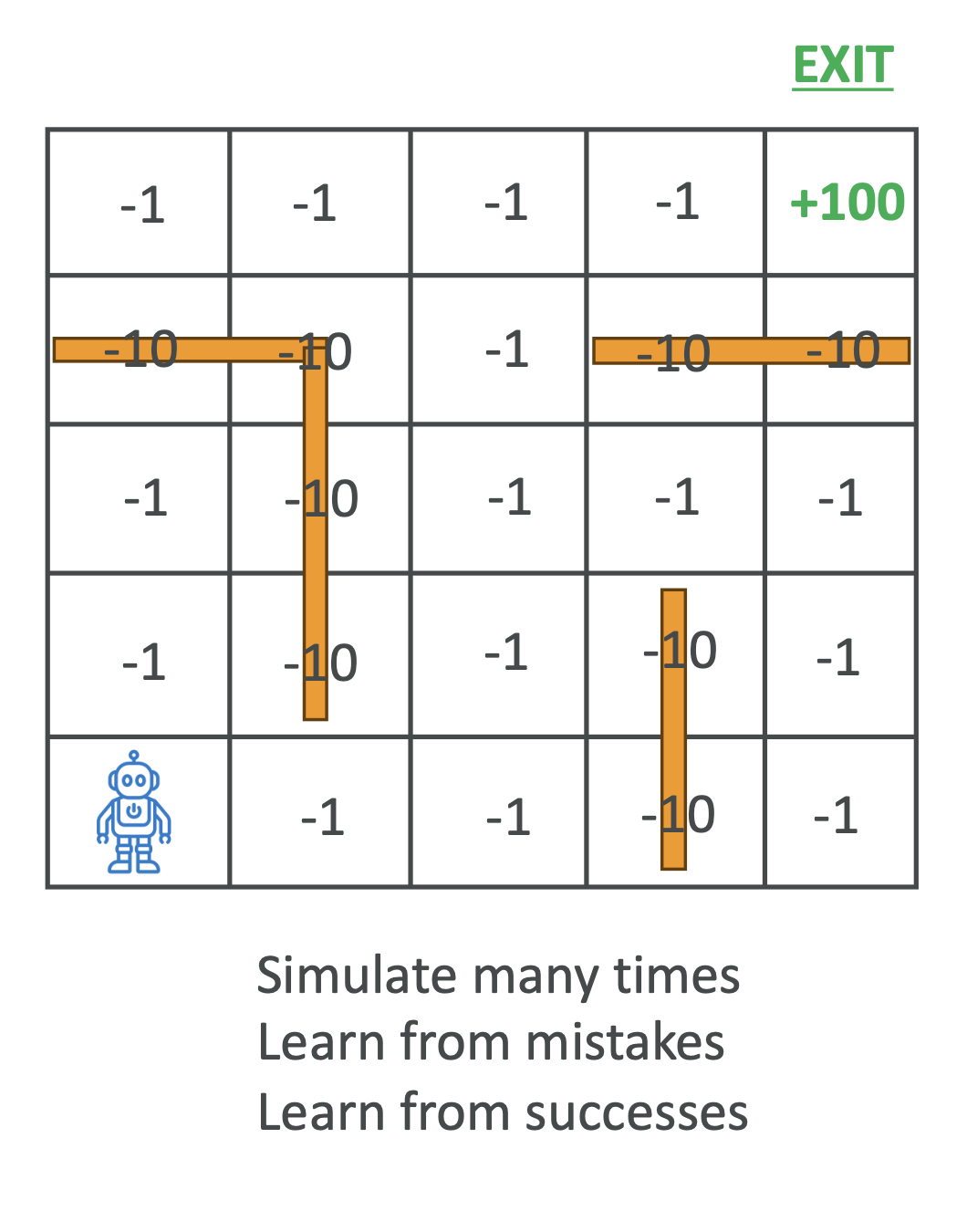

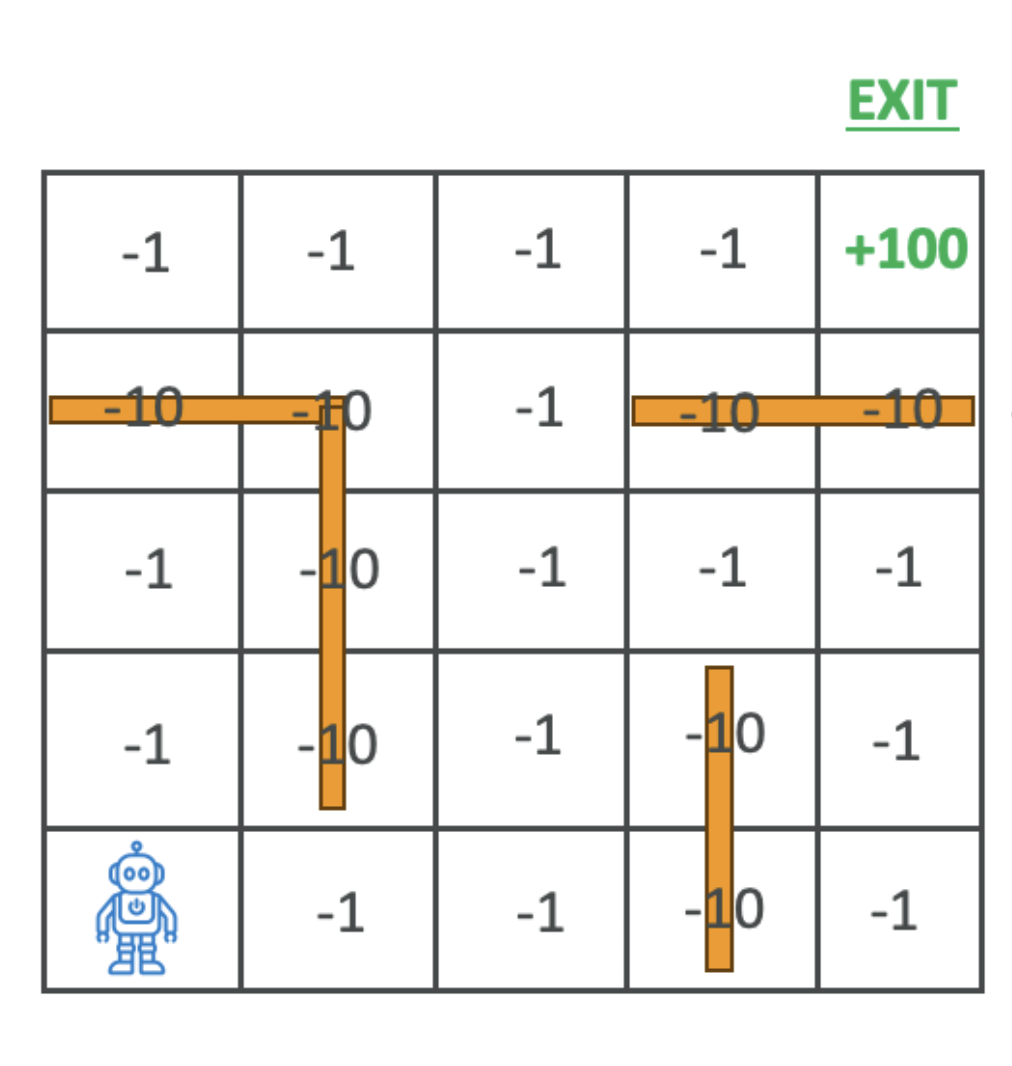

3. Example: Robot in a Maze

- Agent: Robot
- Environment: Maze
- Actions: Move up, down, left, right
- Rewards:
  - -1 for taking a step
  - -10 for hitting a wall
  - +100 for reaching the exit

👉 Over time, the robot learns the best path to the exit by trial and error.
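
The maze example can be sketched with tabular Q-learning, one common RL algorithm. These notes do not name a specific algorithm, so the choice of Q-learning, the 3x3 layout, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all assumptions for illustration. Only the reward values (-1, -10, +100) come from the example.

```python
import random

# Hypothetical 3x3 maze: S = start, E = exit, # = wall, . = free cell
MAZE = [
    "S..",
    ".#.",
    "..E",
]
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
START, EXIT = (0, 0), (2, 2)


def step(state, action):
    """Return (next_state, reward, done) using the rewards from the example."""
    dr, dc = ACTIONS[action]
    r, c = state[0] + dr, state[1] + dc
    out_of_bounds = not (0 <= r < len(MAZE) and 0 <= c < len(MAZE[0]))
    if out_of_bounds or MAZE[r][c] == "#":
        return state, -10, False   # hit a wall: stay in place
    if (r, c) == EXIT:
        return (r, c), 100, True   # reached the exit
    return (r, c), -1, False       # ordinary step


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {}  # (state, action) -> estimated value; missing entries count as 0.0
    for _ in range(episodes):
        state = START
        for _ in range(100):  # cap on steps per episode
            if rng.random() < epsilon:
                action = rng.choice(list(ACTIONS))  # explore
            else:
                action = max(ACTIONS, key=lambda a: q.get((state, a), 0.0))  # exploit
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(
                q.get((next_state, a), 0.0) for a in ACTIONS
            )
            old = q.get((state, action), 0.0)
            # Q-learning update: move the estimate toward reward + discounted best next value
            q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
            state = next_state
            if done:
                break
    return q


def greedy_path(q, max_steps=20):
    """Follow the highest-valued action from the start and record visited cells."""
    state, path = START, [START]
    for _ in range(max_steps):
        action = max(ACTIONS, key=lambda a: q.get((state, a), 0.0))
        state, _, done = step(state, action)
        path.append(state)
        if done:
            break
    return path
```

Calling `greedy_path(train())` should return a route from `(0, 0)` to `(2, 2)`. In this layout the shortest route takes four moves, and the large penalty for walls is what steers the learned values away from bumping into them.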

👉 Click the image or link to watch the video: AI Learns to Escape

4. Applications of Reinforcement Learning

- Gaming → Chess, Go, StarCraft
- Robotics → navigation, object manipulation
- Finance → portfolio management, trading strategies
- Healthcare → personalized treatment recommendations
- Autonomous Vehicles → path planning and decision-making

👉 Exam Tip: AWS exams might frame RL in the context of autonomous systems or AI training optimization.

5. What is RLHF? (Reinforcement Learning from Human Feedback)

RLHF is widely used in Generative AI (like GPT models). It combines reinforcement learning with human feedback to better align AI with human goals.

- In standard RL, the reward function is fixed in advance by the designer (e.g., +100 for reaching the exit).
- In RLHF, humans help define the reward function by ranking model outputs.

Example:
- Machine translation → a “technically correct” translation vs. a “natural-sounding” one.
- Humans score the responses → the model learns to prefer the outputs that humans prefer.
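
One way to picture how human rankings become a reward signal is the sketch below. It turns a best-to-worst ranking into preference pairs and fits one score per response with a Bradley-Terry style objective, a standard way to learn scores from pairwise comparisons. The response labels and function names are hypothetical, and a real reward model is a neural network that scores text rather than a lookup table like this one.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked, 2))


def fit_scores(pairs, steps=200, lr=0.1):
    """Fit one scalar score per response from pairwise human preferences."""
    scores = {item: 0.0 for pair in pairs for item in pair}
    for _ in range(steps):
        grad = {item: 0.0 for item in scores}
        for preferred, rejected in pairs:
            # Modelled probability that a human prefers `preferred` over `rejected`
            p = 1.0 / (1.0 + math.exp(-(scores[preferred] - scores[rejected])))
            grad[preferred] += 1.0 - p
            grad[rejected] -= 1.0 - p
        for item in scores:
            scores[item] += lr * grad[item]  # gradient ascent on the log-likelihood
    return scores


# Hypothetical human ranking of three candidate translations, best first
ranked = ["natural-sounding", "technically correct", "garbled"]
pairs = ranking_to_pairs(ranked)
scores = fit_scores(pairs)
```

After fitting, `scores` orders the three candidates the same way the human ranking did, so it can stand in for the human when scoring these outputs.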

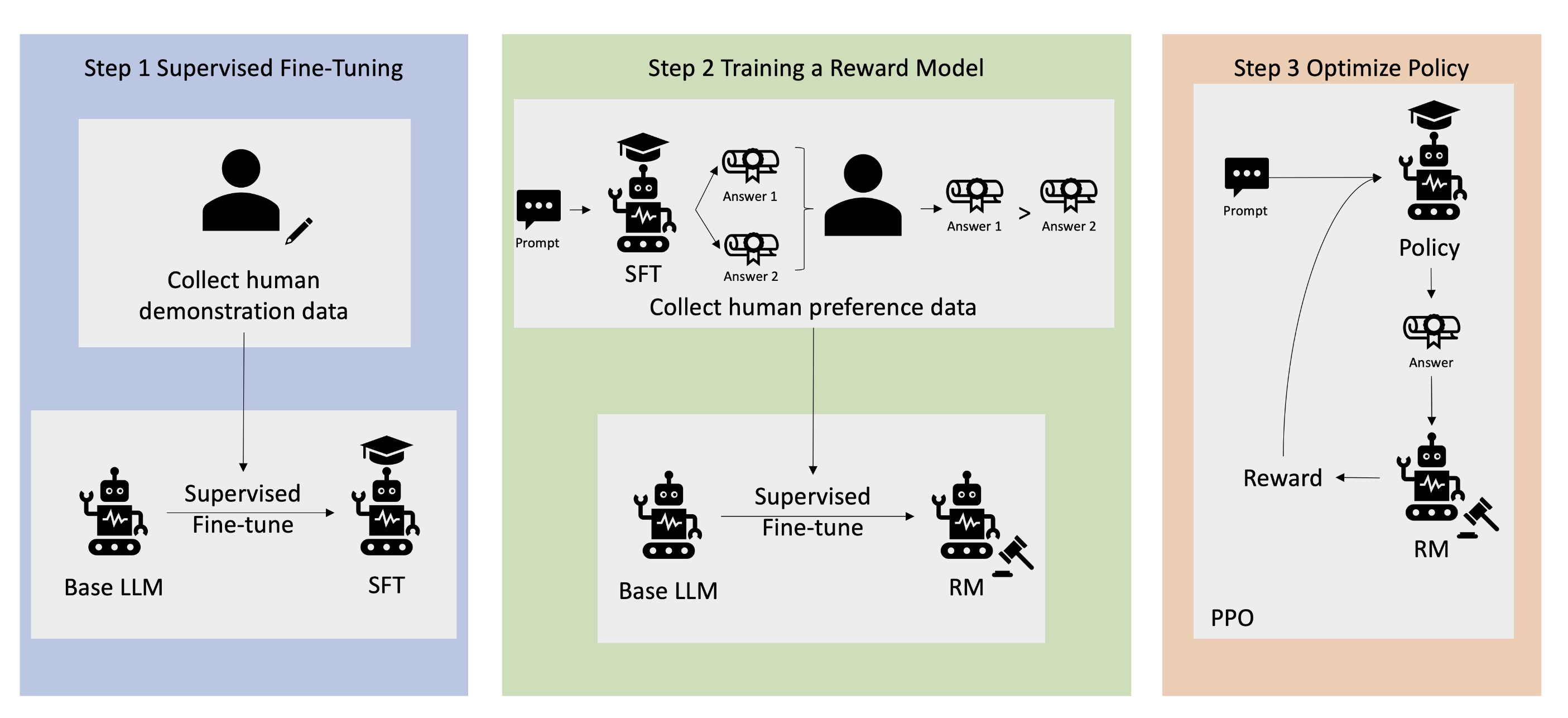

6. How Does RLHF Work?

- Data Collection → Create a set of human prompts and responses.
  - Example: “Where is the HR department in Boston?”
- Supervised Fine-Tuning → Fine-tune a base model on the labeled responses.
- Reward Model Creation → Humans rank multiple responses to the same prompt, and a separate reward model is trained on those rankings.
- Optimization → Use the reward model as the reward function to further train the fine-tuned model with reinforcement learning.

🔄 This process can be repeated and eventually automated.
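
The optimization stage can be illustrated with a toy policy-gradient update. This sketch assumes the "policy" is just a softmax distribution over three fixed candidate answers and that a reward model has already produced a score for each. The score values and function names are hypothetical, and the code is a stand-in for the idea only, not how a full language model is trained in practice.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def optimize_policy(rewards, steps=500, lr=0.5):
    """Gradient ascent on expected reward for a softmax policy over fixed candidates."""
    logits = [0.0] * len(rewards)  # start from a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * r for p, r in zip(probs, rewards))
        # Exact policy gradient: d E[reward] / d logit_k = p_k * (r_k - E[reward])
        logits = [
            l + lr * p * (r - expected)
            for l, p, r in zip(logits, probs, rewards)
        ]
    return softmax(logits)


# Hypothetical reward-model scores for three candidate answers to one prompt
rewards = [0.2, 1.5, -0.7]
probs = optimize_policy(rewards)
```

Starting from a uniform policy, the update shifts probability toward the answer the reward model scores highest (index 1 here) and away from the lowest-scored one, which is the same pressure RLHF applies to a model's outputs.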

7. Why is RLHF Important?

- Aligns AI systems with human preferences.
- Used in LLMs (Large Language Models) like ChatGPT, Anthropic Claude, and others.
- Improves quality, safety, and usefulness of responses.

👉 Exam Tip: If you see “human feedback” or “reward model”, the answer is likely RLHF.

✅ Key Takeaways

- Reinforcement Learning (RL): Agent learns via trial and error to maximize cumulative reward.
- Applications: Games, robotics, finance, healthcare, autonomous vehicles.
- RLHF: Human feedback is used to build the reward function → critical in modern LLMs.
- Exam Strategy: Focus less on math and more on concepts + applications.