AWS Certified AI Practitioner (30) - Hyperparameter Tuning

Hyperparameter Tuning

1. What is a Hyperparameter?

- Definition: Settings that define how the model is structured and how the learning algorithm works.

- Set before training begins (they are not learned from the data).

- Examples:

- Learning rate

- Batch size

- Number of epochs

- Regularization

👉 Exam Tip: Hyperparameters are not learned during training. They are chosen before training and tuned for best performance.
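To make the distinction concrete, here is a minimal sketch in plain Python (no ML library assumed) that fits a line y = w*x + b with gradient descent. `learning_rate` and `epochs` are hyperparameters fixed before training starts, while `w` and `b` are model parameters learned from the data. The function name `train_linear` and the toy data are illustrative assumptions, not part of any AWS service.

```python
def train_linear(xs, ys, learning_rate=0.05, epochs=500):
    """Fit y = w*x + b by full-batch gradient descent on mean squared error.

    learning_rate and epochs are HYPERPARAMETERS: chosen before training.
    w and b are MODEL PARAMETERS: learned from the data during training.
    (train_linear is a hypothetical helper for illustration.)
    """
    w, b = 0.0, 0.0  # learned parameters start at zero
    n = len(xs)
    for _ in range(epochs):  # one epoch = one pass over all examples
        # Gradients of MSE = (1/n) * sum((w*x + b - y)**2)
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (illustrative only)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = train_linear(xs, ys, learning_rate=0.05, epochs=500)
print(round(w, 2), round(b, 2))  # prints: 2.0 1.0
```

Changing `learning_rate` or `epochs` changes how training runs; the values of `w` and `b` are never set by hand.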

2. Why Hyperparameter Tuning Matters

- Goal: Find the best combination of hyperparameters to optimize model performance.

- Benefits:

- Improves accuracy

- Reduces overfitting

- Enhances generalization to new data

- Methods:

- Grid Search: Tries every combination of values from a predefined grid of candidate values.

- Random Search: Tests randomly sampled combinations of values drawn from specified ranges.

- Automated Services:

- Amazon SageMaker Automatic Model Tuning (AMT) runs multiple training jobs with different hyperparameter values and picks the combination that gives the best result on a chosen objective metric.
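The difference between grid search and random search can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: `validation_score` is a hypothetical stand-in for "train a model and measure it on validation data" (a real tuner such as AMT would launch actual training jobs), and the search ranges are made up.

```python
import itertools
import random

def validation_score(learning_rate, batch_size):
    """Hypothetical objective that peaks at learning_rate=0.01, batch_size=32.

    In practice this number would come from training and evaluating a model.
    """
    return -((learning_rate - 0.01) ** 2) * 1e4 - ((batch_size - 32) / 32) ** 2

# Assumed candidate values for the grid
search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [16, 32, 64],
}

def grid_search(space):
    """Try EVERY combination in the grid (3 x 3 = 9 trials here)."""
    names = list(space)
    trials = [dict(zip(names, combo)) for combo in itertools.product(*space.values())]
    return max(trials, key=lambda p: validation_score(**p)), len(trials)

def random_search(n_trials, seed=0):
    """Try n_trials RANDOMLY sampled combinations from the assumed ranges."""
    rng = random.Random(seed)
    trials = [
        {
            "learning_rate": 10 ** rng.uniform(-3, -1),  # log-uniform in [0.001, 0.1]
            "batch_size": rng.choice([16, 32, 64]),
        }
        for _ in range(n_trials)
    ]
    return max(trials, key=lambda p: validation_score(**p)), len(trials)

best_grid, n_grid = grid_search(search_space)
best_random, n_random = random_search(5)
print("grid:", best_grid, "trials:", n_grid)
print("random:", best_random, "trials:", n_random)
```

Grid search cost grows multiplicatively with every hyperparameter you add, which is why random search (a fixed trial budget) or an automated service is often preferred for larger search spaces.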

3. Key Hyperparameters

(1) Learning Rate

- Controls how big the steps are when updating model weights.

- High learning rate: Faster training, but may overshoot the optimal solution.

- Low learning rate: More stable and precise, but slower to converge.
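The effect of the learning rate can be seen on the simplest possible problem: minimizing f(x) = x**2, whose minimum is at x = 0 and whose gradient is 2*x. This toy function is an assumption chosen for clarity, not a real model.

```python
def gradient_descent(learning_rate, steps=20, x0=5.0):
    """Minimize f(x) = x**2 (minimum at x = 0) using its gradient 2*x."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x  # step size is proportional to learning_rate
    return x

for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"learning_rate={lr}: x after 20 steps = {gradient_descent(lr):.4f}")
```

With 0.01 the value is still far from 0 after 20 steps (stable but slow); with 0.1 it gets close; with 0.9 each step overshoots past 0 (the sign flips) but still converges; with 1.1 the overshoot grows every step and training diverges.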

(2) Batch Size

- Number of training examples processed in one iteration.

- Small batches: More stable, but slower.

- Large batches: Faster, but may cause less stable updates.
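Batch size directly sets how many weight updates happen per epoch: one update per batch. A minimal sketch, assuming a dataset of 1,000 examples represented by a plain list and a hypothetical helper `minibatches`:

```python
import math

def minibatches(data, batch_size):
    """Split a dataset into consecutive mini-batches; the last one may be smaller."""
    return [data[i:i + batch_size] for i in range(0, len(data), batch_size)]

dataset = list(range(1000))  # stand-in for 1,000 training examples
for batch_size in (1, 32, 1000):
    batches = minibatches(dataset, batch_size)
    # One weight update per batch, so len(batches) = updates per epoch.
    print(batch_size, len(batches), math.ceil(len(dataset) / batch_size))
```

A batch size of 1 means 1,000 updates per epoch, 32 means 32 updates (the last batch holds only 8 examples), and 1,000 means a single update. Total updates over training is this number multiplied by the number of epochs.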

(3) Number of Epochs

- How many times the model goes through the entire training dataset.

- Too few: Underfitting (model doesn’t learn enough).

- Too many: Overfitting (model memorizes the data, performs poorly on new data).

(4) Regularization

- Controls the balance between a simple and a complex model (for example, by penalizing large weights).

- More regularization → less overfitting.

👉 Exam Tip: If asked how to reduce overfitting, increasing regularization is often the correct answer.
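The notes do not name a specific kind of regularization; L2 (ridge) regularization is assumed here as a common example. For a one-feature model y = w*x with no intercept, the L2-regularized fit has a closed form, and the regularization strength `lam` (a hyperparameter) visibly pulls the learned weight toward zero, giving a simpler model.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form L2-regularized (ridge) fit of y = w*x with no intercept.

    Minimizes sum((w*x - y)**2) + lam * w**2, whose solution is
    w = sum(x*y) / (sum(x*x) + lam). Larger lam shrinks w toward 0.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # toy data on the line y = 2x (illustrative)
for lam in (0.0, 1.0, 10.0, 100.0):
    print(lam, round(ridge_weight(xs, ys, lam), 3))
```

With no regularization the weight is exactly 2.0; as `lam` grows it shrinks (about 1.867, 1.167, then 0.246), trading some fit on the training data for a simpler model.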

4. Overfitting

What is it?

- The model performs very well on training data but poorly on new, unseen data.

Causes

- Too little training data → the data is not representative of all possible inputs.

- Training for too many epochs.

- Model too complex → learns noise instead of patterns.
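A tiny experiment shows what "learns noise instead of patterns" looks like. The dataset below is made up for illustration: the true rule is "label 1 if x >= 5", but two training labels are deliberately noisy. An overly complex model that memorizes every training point (1-nearest-neighbor) is compared with a simple threshold rule.

```python
# Toy 1-D dataset (illustrative): true rule is "label 1 if x >= 5",
# but the training labels at x=4 and x=8 are noisy.
train = [(1, 0), (2, 0), (3, 0), (4, 1), (6, 1), (7, 1), (8, 0), (9, 1)]
test = [(1.2, 0), (2.8, 0), (4.2, 0), (6.8, 1), (8.2, 1)]

def memorizer(x):
    """Overly complex model: copy the label of the closest training point (1-NN)."""
    _, label = min(train, key=lambda pair: abs(pair[0] - x))
    return label

def simple_rule(x):
    """Simple model: a single threshold."""
    return 1 if x >= 5 else 0

def accuracy(model, data):
    """Fraction of examples the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)

for name, model in (("memorizer", memorizer), ("simple_rule", simple_rule)):
    print(name, "train:", accuracy(model, train), "test:", accuracy(model, test))
```

The memorizer scores 1.0 on training data but only 0.6 on new data, because it reproduced the two noisy labels. The simple rule scores 0.75 on training data yet 1.0 on new data: that gap is the signature of overfitting.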

Solutions

- Increase training data size (best option).

- Use early stopping (stop training once performance on validation data stops improving, before the model starts to overfit).

- Apply data augmentation (add diversity to training data).

- Adjust hyperparameters (e.g., increase regularization, change batch size).
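Early stopping is usually implemented with a "patience" rule: keep training while the validation loss improves, and stop after it has failed to improve for a set number of epochs. The sketch below assumes that rule and uses a made-up list of per-epoch validation losses in place of real training; frameworks offer their own versions with their own parameter names.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) for a patience-based early-stopping rule.

    Training stops once the validation loss has not improved for `patience`
    consecutive epochs; the model from best_epoch is the one kept.
    (Hypothetical helper for illustration.)
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1  # never triggered: ran every epoch

# Hypothetical validation losses: improving at first, then rising (overfitting)
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
print(early_stopping_epoch(val_losses, patience=2))  # prints: (3, 5)
```

Training halts at epoch 5 instead of running all 8 epochs, and the model from epoch 3 (lowest validation loss) is kept.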

👉 Exam Tip: If the question is “best way to prevent overfitting”, the answer is usually increase training data.

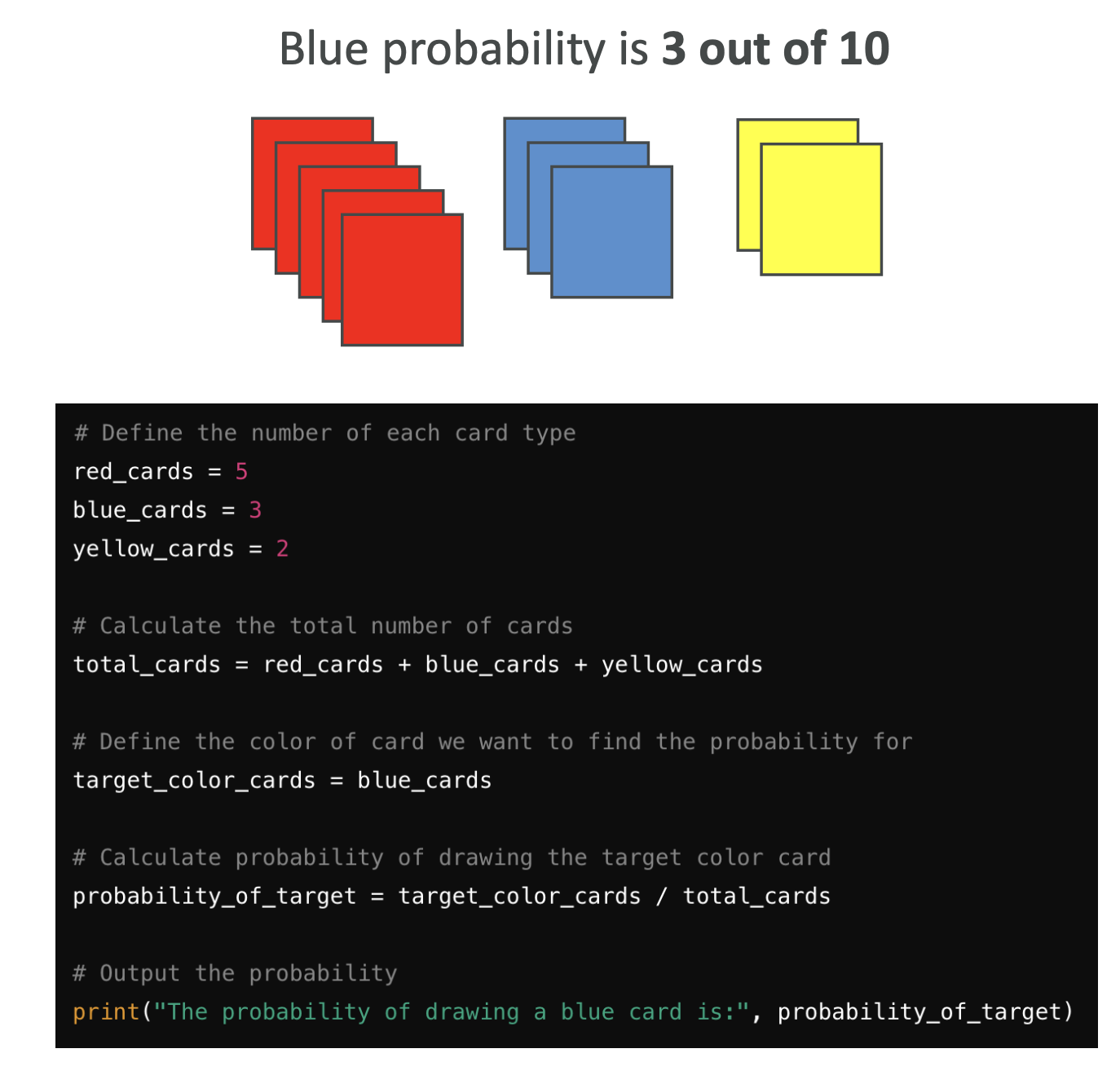

5. When NOT to Use Machine Learning

- Example:

You have a deck of 10 cards (5 red, 3 blue, 2 yellow).

Q: What is the probability of drawing a blue card?

A: 3/10 = 0.3

This is a deterministic problem:

- The exact answer can be computed mathematically.

- Writing simple code is the best solution.

If we used ML (supervised, unsupervised, or reinforcement learning), we’d only get an approximation, not an exact result.
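The card question needs only a couple of lines of ordinary code. For contrast, the second half estimates the same probability by random sampling; this stands in for any approach that infers the answer from observed data (it is not an ML model itself) and only approximates the exact result.

```python
from fractions import Fraction
import random

deck = ["red"] * 5 + ["blue"] * 3 + ["yellow"] * 2

# Deterministic answer: count and divide. Exact, and always the same.
exact = Fraction(deck.count("blue"), len(deck))
print(exact, float(exact))  # prints: 3/10 0.3

# Data-driven estimate: draw cards at random and measure the frequency.
rng = random.Random(42)
draws = 10_000
estimate = sum(rng.choice(deck) == "blue" for _ in range(draws)) / draws
print(estimate)  # close to 0.3, but only an approximation
```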

👉 Exam Tip:

Machine Learning is not appropriate for problems that have a clear, deterministic answer. It is designed for problems where patterns must be learned from data.

6. AWS-Specific Notes for Exams

- Amazon SageMaker Automatic Model Tuning (AMT): Automates hyperparameter tuning by running multiple training jobs in parallel.

- Common Exam Questions:

- How to fix overfitting → Increase data / regularization.

- What hyperparameter affects convergence speed → Learning rate.

- Which AWS service automates tuning → SageMaker AMT.

- When NOT to use ML → Deterministic problem with exact answers.

✅ Summary

- Hyperparameters (learning rate, batch size, epochs, regularization) must be tuned for best performance.

- Tuning improves accuracy, reduces overfitting, and enhances generalization.

- Overfitting occurs when the model memorizes the training data → fix it with more data, more regularization, or early stopping.

- ML is not appropriate for deterministic problems.

- On AWS, SageMaker AMT is the go-to tool for automated hyperparameter tuning.