AWS Certified AI Practitioner (35) - EC2

Amazon EC2 (Elastic Compute Cloud)

What it is

- EC2 = Elastic Compute Cloud → AWS’s most popular IaaS (Infrastructure as a Service) offering.

- With EC2, you can:

  - Rent virtual machines (instances).

  - Attach storage with EBS (Elastic Block Store) or EFS.

  - Distribute traffic with ELB (Elastic Load Balancing).

  - Scale automatically with Auto Scaling Groups (ASG).

- Why it matters: Understanding EC2 is fundamental to understanding the AWS Cloud.

EC2 Sizing & Configuration Options

When launching an EC2 instance, you configure:

- Operating System (OS): Linux, Windows, or macOS.

- CPU: Number of vCPUs and compute power.

- Memory (RAM): Amount of memory allocated.

- Storage Options:

  - Network-attached storage: EBS or EFS.

  - Instance Store (local hardware): Very fast but ephemeral; data is lost when the instance is stopped or terminated.

- Networking: Network card bandwidth, public IP address.

- Firewall: Security Groups control inbound/outbound traffic.

- Bootstrap Scripts: EC2 User Data can configure the instance at first launch (e.g., install packages, run setup commands).
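To make the options above concrete, here is a minimal sketch of how they could map onto the keyword arguments of boto3's `run_instances` call. The `build_launch_params` helper, the AMI ID, the security group ID, and the device name are placeholders invented for illustration, and the user data script assumes an Amazon Linux AMI where `yum` is available. Nothing is sent to AWS by this snippet.

```python
# Hypothetical helper: maps the configuration choices above onto
# keyword arguments for boto3's EC2 client method run_instances().
USER_DATA = """#!/bin/bash
# Runs once, as root, on the instance's first boot.
yum update -y
yum install -y httpd
systemctl enable --now httpd
"""


def build_launch_params(ami_id, instance_type, security_group_id, volume_gib=8):
    """Return run_instances() keyword arguments for a single instance."""
    return {
        "ImageId": ami_id,                          # the OS comes from the AMI
        "InstanceType": instance_type,              # fixes vCPUs, RAM, network bandwidth
        "MinCount": 1,
        "MaxCount": 1,
        "SecurityGroupIds": [security_group_id],    # firewall
        "BlockDeviceMappings": [                    # root EBS volume
            {
                "DeviceName": "/dev/xvda",          # assumed root device name; depends on the AMI
                "Ebs": {"VolumeSize": volume_gib, "VolumeType": "gp3"},
            }
        ],
        "UserData": USER_DATA,                      # bootstrap script
    }


params = build_launch_params(
    ami_id="ami-0123456789abcdef0",                 # placeholder, not a real AMI
    instance_type="t3.micro",
    security_group_id="sg-0123456789abcdef0",       # placeholder
)
print(params["InstanceType"])  # t3.micro
```

With credentials configured, `boto3.client("ec2").run_instances(**params)` would submit the request; boto3 base64-encodes `UserData` automatically.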

💡 Exam Tip:

- Security Groups = virtual firewalls.

- EC2 User Data = automation at first boot.
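The "virtual firewall" idea can be illustrated with a toy model: a security group holds allow rules only, and inbound traffic that matches no rule is denied. The sketch below is not an AWS API; `InboundRule` and `is_inbound_allowed` are hypothetical names, and the model ignores outbound rules and the fact that real security groups are stateful.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    protocol: str   # e.g. "tcp"
    from_port: int  # start of the allowed port range
    to_port: int    # end of the allowed port range (inclusive)
    cidr: str       # allowed source range, e.g. "0.0.0.0/0"


def is_inbound_allowed(rules, protocol, port, source_ip):
    """Allow the packet if any rule matches; otherwise deny by default."""
    source = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (
            rule.protocol == protocol
            and rule.from_port <= port <= rule.to_port
            and source in ipaddress.ip_network(rule.cidr)
        ):
            return True
    return False


web_sg = [
    InboundRule("tcp", 443, 443, "0.0.0.0/0"),     # HTTPS from anywhere
    InboundRule("tcp", 22, 22, "203.0.113.0/24"),  # SSH from one example range only
]

print(is_inbound_allowed(web_sg, "tcp", 443, "198.51.100.7"))  # True
print(is_inbound_allowed(web_sg, "tcp", 22, "198.51.100.7"))   # False
```

The second call is denied because no rule covers SSH from that source address, which mirrors the default-deny behavior for inbound traffic.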

Amazon’s Hardware for AI & ML

For machine learning workloads, AWS offers specialized EC2 families and custom silicon:

GPU Instances

- P-series (P3, P4, P5): Optimized for deep learning training.

- G-series (G3–G6): Graphics, inference, and virtual desktops.

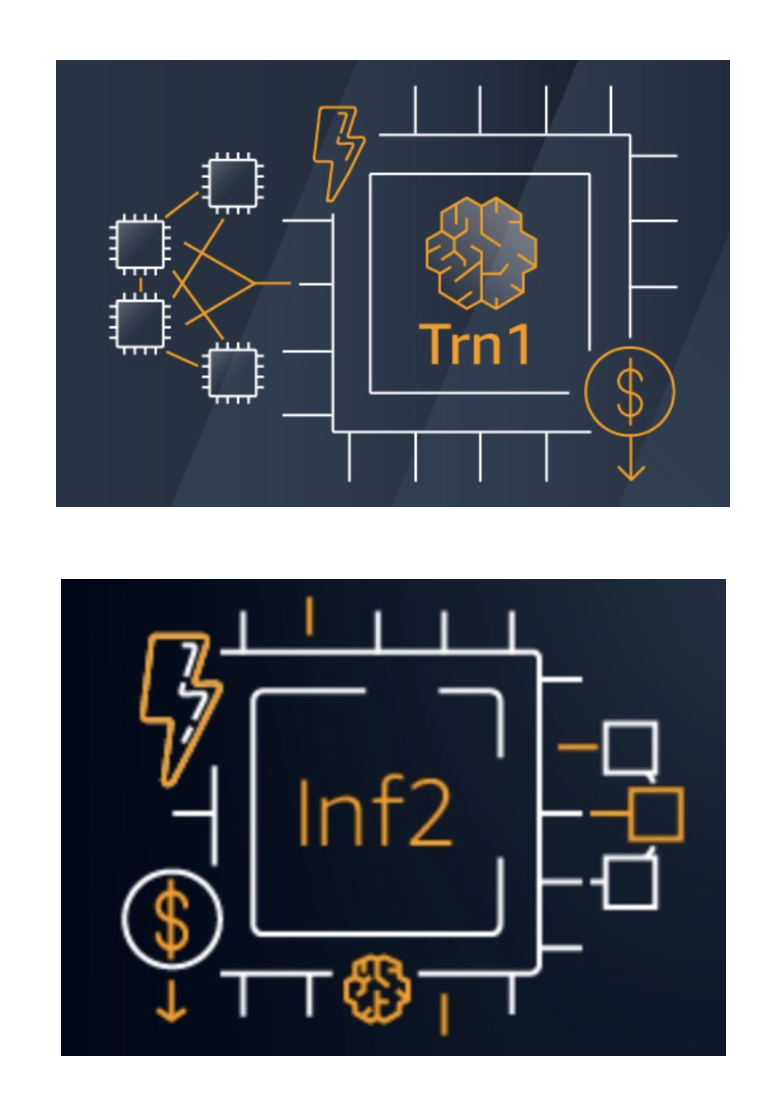

AWS Trainium

- Custom ML training chip designed for deep learning models with 100B+ parameters.

- Trn1 instances have up to 16 Trainium accelerators.

- Up to 50% lower cost to train compared with comparable GPU-based EC2 instances (AWS's figure).

AWS Inferentia

- Custom inference chip optimized for performance and cost.

- Powers Inf1 and Inf2 instances.

- AWS cites up to 70% lower cost per inference for Inf1 than comparable GPU-based EC2 instances, and up to 4x higher throughput for Inf2 than Inf1.

👉 Exam Tip:

- Trainium (Trn1) = Training at scale, lower cost.

- Inferentia (Inf1/Inf2) = High-performance, low-cost inference.

- Both are positioned as having the lowest environmental footprint → “green AI hardware.”
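As a study aid, the mapping from workload to instance family in this section can be written as a small lookup. The family names come from the notes above; the `suggest_family` function and its parameter values are hypothetical, and real instance selection also depends on model size, framework support, and regional availability.

```python
# Study-aid lookup: (workload, hardware preference) -> EC2 family from the notes.
FAMILIES = {
    ("training", "aws_silicon"): "Trn1 (Trainium)",
    ("training", "gpu"): "P-series",
    ("inference", "aws_silicon"): "Inf1/Inf2 (Inferentia)",
    ("inference", "gpu"): "G-series",
}


def suggest_family(workload, hardware="aws_silicon"):
    """Return the instance family the notes associate with this workload."""
    try:
        return FAMILIES[(workload, hardware)]
    except KeyError:
        raise ValueError(f"unknown combination: {workload!r}, {hardware!r}") from None


print(suggest_family("training"))          # Trn1 (Trainium)
print(suggest_family("inference", "gpu"))  # G-series
```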

Key Takeaways for the Exam

- EC2 = Virtual servers in the cloud.

- Storage: EBS/EFS for persistent storage, Instance Store for temporary high-speed storage.

- ELB & ASG: Load balancing and automatic scaling.

- Security Group: Acts as a virtual firewall.

- User Data: Automates setup at the instance's first launch.

- AI Workloads:

  - GPU Instances (P, G series) for ML/DL.

  - Trainium (Trn1) for training.

  - Inferentia (Inf1/Inf2) for inference.

💡 Common Exam Question Patterns:

- “Which instance type is best for large-scale ML model training?” → Trn1 (Trainium).

- “Which option provides cost-effective inference at scale?” → Inferentia (Inf1/Inf2).

- “Where do you configure startup scripts for EC2?” → EC2 User Data.

All articles on this blog are licensed under CC BY-NC-SA 4.0 unless otherwise stated.