AWS Certified AI Practitioner(39) - Responsible AI, Security, Governance, and Compliance

Responsible AI, Security, Governance, and Compliance

This section is less about building models and more about ensuring trust, safety, and compliance when deploying AI. It is text-heavy, but it matters for the AWS Certified AI Practitioner exam. Let’s go step by step.

Responsible AI

Definition: Responsible AI ensures that AI systems are transparent, trustworthy, and beneficial to society. It reduces risks and negative outcomes across the entire AI lifecycle:

- Design → Development → Deployment → Monitoring → Evaluation

Key Dimensions of Responsible AI

- Fairness – Promote inclusion and prevent discrimination.

- Explainability – Ensure humans can understand why a model made a decision.

- Privacy & Security – Data and models are obtained, used, and protected appropriately; individuals control when and how their data is used.

- Transparency – Clear visibility into how models operate and their limitations.

- Veracity & Robustness – Models should produce correct, reliable outputs even with unexpected or adversarial inputs.

- Governance – Define, implement, and enforce responsible AI practices.

- Safety – AI should benefit individuals and society, minimizing harm.

- Controllability – Ensure models can be aligned with human values and intent.

👉 Exam Tip: Expect questions about bias detection, explainability vs. interpretability, and fairness in AI systems.

Security

For AI systems, security must uphold the CIA triad:

- Confidentiality – Data is protected from unauthorized access.

- Integrity – Data and model predictions are reliable and unchanged.

- Availability – AI services are accessible when needed.

This applies not just to data, but also to infrastructure and organizational assets.
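As one illustration of the integrity leg of the triad, the sketch below records a SHA-256 fingerprint of a model artifact and later checks that the bytes have not changed. It is a minimal example under stated assumptions: the `fingerprint` and `is_unchanged` helpers and the sample bytes are hypothetical, and a real deployment would also need access controls and secure storage for the recorded hash.

```python
import hashlib
import hmac

def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()

def is_unchanged(data: bytes, expected: str) -> bool:
    """Compare the current fingerprint with the recorded one in constant time."""
    return hmac.compare_digest(fingerprint(data), expected)

# Hypothetical stand-in for a serialized model file.
model_bytes = b"hypothetical model weights"
recorded = fingerprint(model_bytes)

print(is_unchanged(model_bytes, recorded))                # True
print(is_unchanged(model_bytes + b"tampered", recorded))  # False
```

Any change to the artifact, however small, produces a different digest, which is why a stored hash is a common way to detect tampering.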

Governance & Compliance

- Governance – Defines policies, oversight, and processes to align AI with legal and regulatory requirements, while improving trust.

- Compliance – Ensures adherence to regulations (critical in healthcare, finance, and legal sectors).

👉 Exam Tip: If a question mentions regulations, risk management, or improving trust, the answer usually relates to governance and compliance.

AWS Services for Responsible AI

AWS provides multiple tools to implement responsible AI:

Amazon Bedrock

- Human or automated model evaluation.

- Guardrails: block harmful content, filter undesirable topics, redact PII (Personally Identifiable Information).
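To make the PII-redaction idea concrete, here is a minimal sketch in plain Python. It is not the Bedrock Guardrails API: the `redact_pii` function and the two regex patterns are hypothetical stand-ins that only illustrate masking sensitive values before text is returned to a user.

```python
import re

# Hypothetical patterns for illustration only; a real guardrail covers many more PII types.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}

def redact_pii(text: str) -> str:
    """Replace each matched PII value with a placeholder such as {EMAIL}."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub("{" + label + "}", text)
    return text

print(redact_pii("Contact jane.doe@example.com or 555-123-4567."))
# Contact {EMAIL} or {PHONE}.
```

Simple patterns like these miss many formats, which is one reason a managed guardrail is usually preferable to hand-written rules.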

SageMaker Clarify

- Evaluate models for accuracy, robustness, and toxicity.

- Detect bias (e.g., data over-representing middle-aged groups).
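The over-representation example above can be checked with a few lines of plain Python. This sketch does not call SageMaker Clarify. The `group_shares` and `class_imbalance` helpers and the age-group data are hypothetical. The imbalance score is a normalized size difference between two groups, similar in spirit to a class-imbalance metric.

```python
from collections import Counter

def group_shares(groups):
    """Return each group's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(groups)
    total = len(groups)
    return {group: n / total for group, n in counts.items()}

def class_imbalance(n_a, n_d):
    """Normalized size difference: 0 means balanced, values near +/-1 mean skewed."""
    return (n_a - n_d) / (n_a + n_d)

# Hypothetical age-group labels for ten training records.
ages = ["middle"] * 7 + ["young"] * 2 + ["senior"] * 1
shares = group_shares(ages)

print(shares["middle"])        # 0.7
print(class_imbalance(7, 1))   # 0.75
```

A score of 0.75 between the middle-aged and senior groups signals that the dataset is heavily skewed toward the former.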

SageMaker Data Wrangler

- Fix dataset bias (e.g., use data augmentation for underrepresented groups).
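One simple way to rebalance a dataset is random oversampling, sketched below in plain Python. This is a stand-in for the augmentation idea, not Data Wrangler itself; the `oversample` helper and the `age_group` field are hypothetical.

```python
import random
from collections import Counter

def oversample(records, group_key, seed=0):
    """Randomly duplicate records from smaller groups until every group matches the largest."""
    rng = random.Random(seed)
    by_group = {}
    for rec in records:
        by_group.setdefault(rec[group_key], []).append(rec)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Top up the group with random duplicates of its own records.
        balanced.extend(rng.choice(rows) for _ in range(target - len(rows)))
    return balanced

# Hypothetical dataset: six middle-aged records, two young records.
records = [{"age_group": "middle"}] * 6 + [{"age_group": "young"}] * 2
balanced = oversample(records, "age_group")
counts = Counter(r["age_group"] for r in balanced)
print(counts["middle"], counts["young"])  # 6 6
```

Duplicating records adds no new information, so in practice it is often combined with collecting more data or generating synthetic samples.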

SageMaker Model Monitor

- Monitor model quality in production, detect drift, and trigger alerts.
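The drift-and-alert idea can be sketched with the standard library alone. This is not how Model Monitor is implemented. The `drift_score` and `check_drift` helpers, the threshold of 2.0, and the income values are all assumptions chosen for illustration.

```python
from statistics import mean, stdev

def drift_score(baseline, live):
    """Shift of the live mean from the baseline mean, in baseline standard deviations."""
    return abs(mean(live) - mean(baseline)) / stdev(baseline)

def check_drift(baseline, live, threshold=2.0):
    """Return True when the shift exceeds the (hypothetical) alert threshold."""
    return drift_score(baseline, live) > threshold

baseline_income = [48, 50, 52, 49, 51]   # training-time feature values (in $K)
stable_income = [49, 51, 50, 50, 52]     # production data that resembles training data
shifted_income = [70, 72, 68, 71, 69]    # production data after a population shift

print(check_drift(baseline_income, stable_income))   # False
print(check_drift(baseline_income, shifted_income))  # True
```

A `True` result is the point at which a monitoring system would raise an alert or trigger retraining.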

Amazon Augmented AI (A2I)

- Human review of low-confidence model predictions.
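The routing logic behind human review can be sketched as a simple confidence threshold. This is not the A2I API; the `route_prediction` function, the status strings, and the 0.8 threshold are hypothetical.

```python
def route_prediction(label, confidence, threshold=0.8):
    """Send low-confidence predictions to a human reviewer; accept the rest automatically."""
    if confidence < threshold:
        return {"label": label, "status": "needs_human_review"}
    return {"label": label, "status": "auto_approved"}

print(route_prediction("approve_loan", 0.95)["status"])  # auto_approved
print(route_prediction("approve_loan", 0.55)["status"])  # needs_human_review
```

Raising the threshold sends more predictions to reviewers, trading cost and latency for extra oversight.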

SageMaker Governance Tools

- Model Cards – Document model details (intended use, risks, training data).

- Model Dashboard – View and track all models in one place.

- Role Manager – Define access controls for different personas (e.g., data scientist vs. MLOps engineer).

AWS AI Service Cards

- Official documentation that describes intended use cases, limitations, and best practices for responsible AI.

Interpretability vs. Explainability

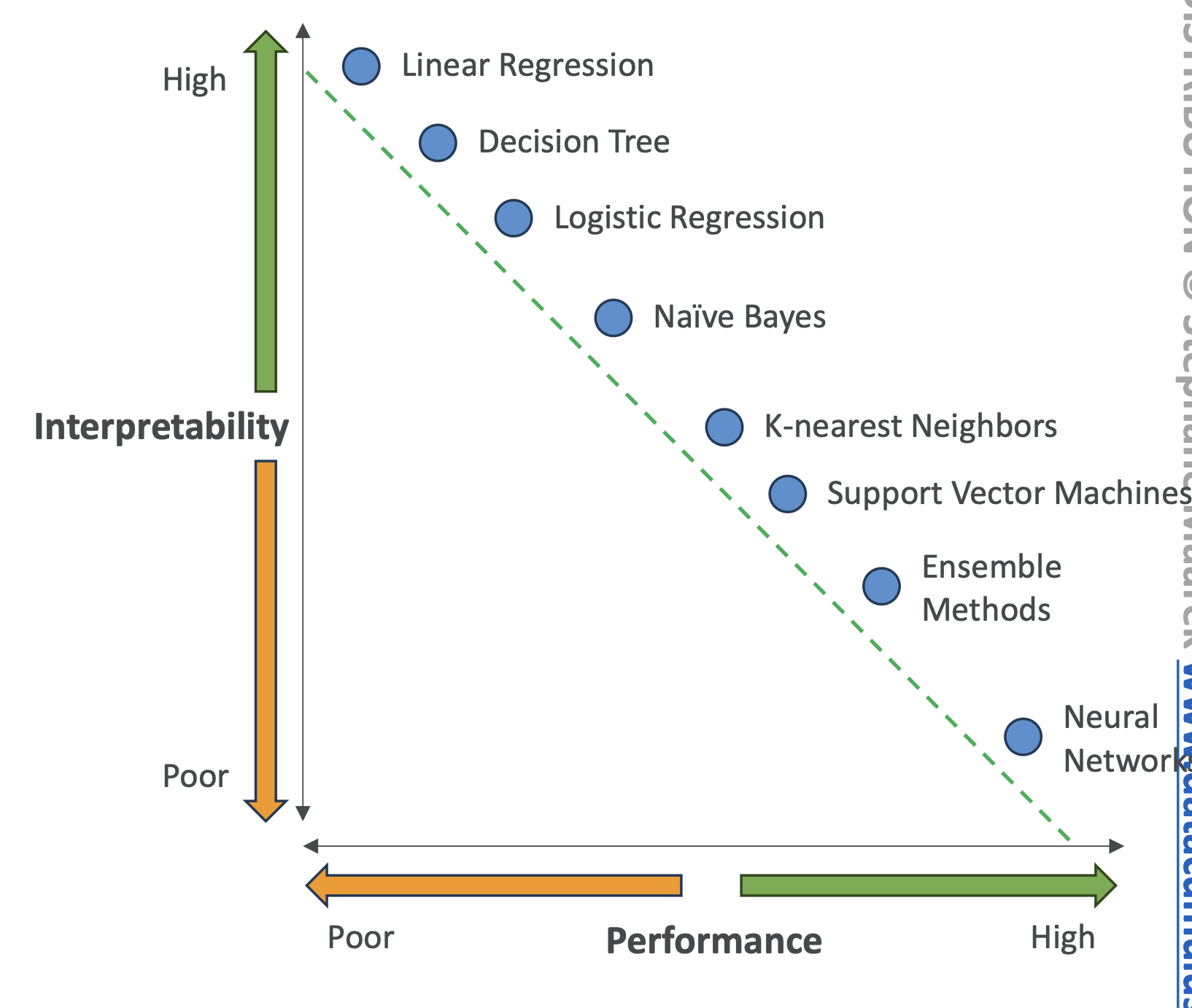

Interpretability

- The degree to which a human can understand the cause of a model’s decision.

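- High interpretability = transparent models, which are often less powerful on complex problems.

- Trade-off (a general tendency, not a strict rule):

  - Linear regression = high interpretability, but often lower performance on complex, non-linear data.

  - Neural networks = low interpretability, but often higher performance on complex data.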

Explainability

- Describes how inputs relate to outputs without requiring knowledge of the model’s internal mechanics.

- Example: “Given income and credit history, the model predicts loan approval.”

- Often enough for compliance and trust, even if the model itself is complex.

👉 Exam Tip: If a question mentions “why and how” → interpretability, but if it says “explain results to stakeholders” → explainability.

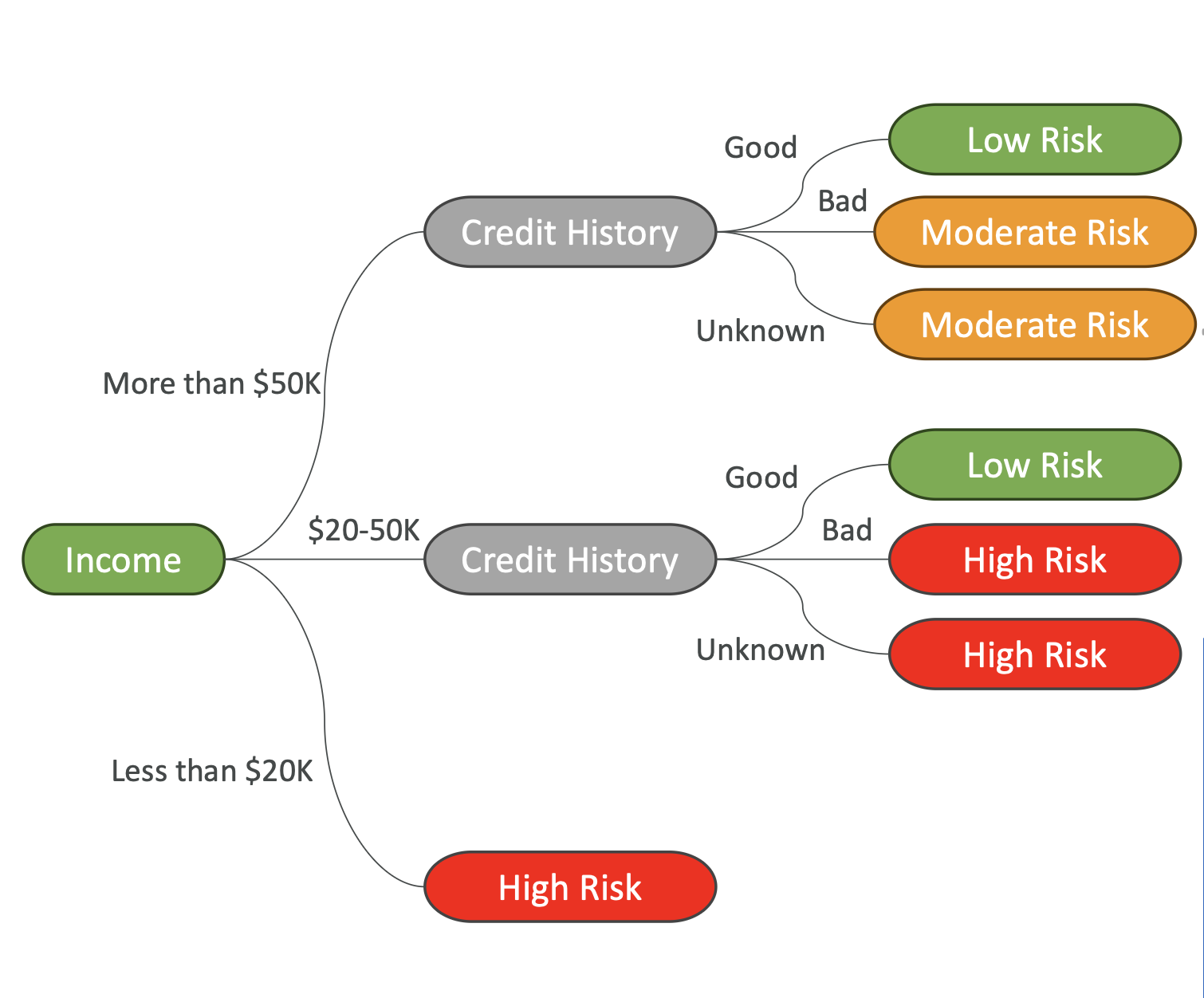

High Interpretability Models

- Decision Trees

  - Simple, rule-based splits (e.g., “Is income > $50K?”).

  - Easy to interpret and visualize.

  - Risk: prone to overfitting if too many branches.
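A decision tree is interpretable because it reads as nested if/else rules. The sketch below hand-writes a two-level tree. The income split comes from the example above, while the `approve_loan` function and the credit-history split are hypothetical additions for illustration.

```python
def approve_loan(income, has_good_credit):
    """A two-level decision tree written as explicit rules."""
    if income > 50_000:               # first split: "Is income > $50K?"
        if has_good_credit:           # second split (hypothetical)
            return "approve"
        return "manual review"
    return "reject"

print(approve_loan(80_000, True))    # approve
print(approve_loan(80_000, False))   # manual review
print(approve_loan(30_000, True))    # reject
```

Every prediction can be traced to the exact path of conditions that produced it, which is the sense in which the model is highly interpretable.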

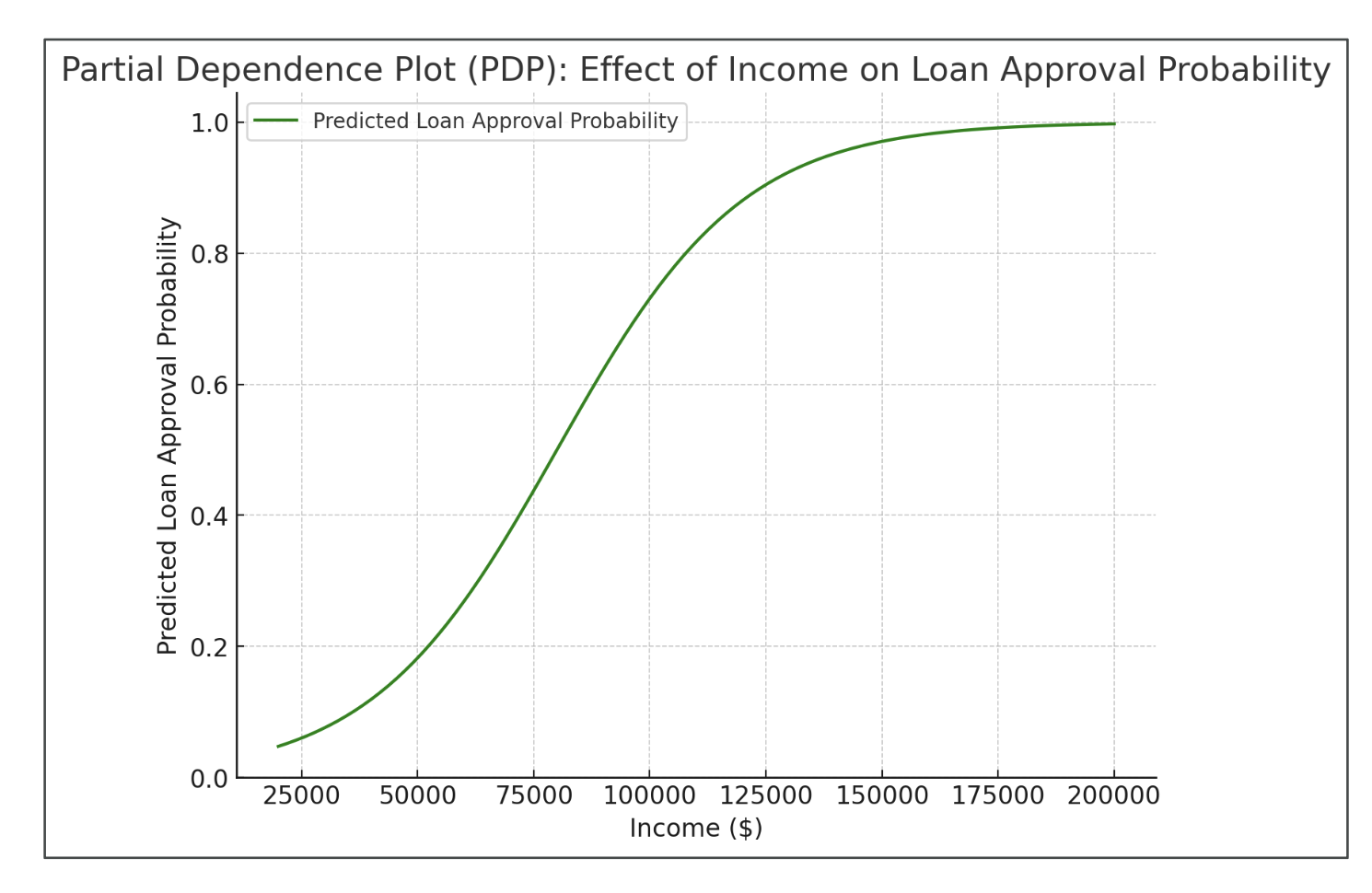

Tools for Black-Box Models

- Partial Dependence Plots (PDPs)

  - Show how the average prediction changes as a single feature varies, with the effect of the other features averaged out.

  - Useful for black-box models like neural networks.

  - Example: income vs. probability of loan approval.
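The values behind a partial dependence plot can be computed by hand: for each candidate value of the feature, overwrite that feature in every row, run the model, and average the predictions. The sketch below assumes a hypothetical `predict` scoring function in place of a trained model, and the field names `income_k` and `good_credit` are illustrative.

```python
def partial_dependence(predict, rows, feature, grid):
    """For each grid value, set `feature` to it in every row and average the predictions."""
    curve = []
    for value in grid:
        preds = [predict({**row, feature: value}) for row in rows]
        curve.append(sum(preds) / len(preds))
    return curve

# Hypothetical black-box scoring function standing in for a trained model.
def predict(row):
    score = 0.2 + 0.005 * row["income_k"] + (0.2 if row["good_credit"] else 0.0)
    return min(score, 1.0)

rows = [
    {"income_k": 30, "good_credit": True},
    {"income_k": 60, "good_credit": False},
]

# Average predicted approval probability at incomes of $20K, $60K, and $100K.
curve = partial_dependence(predict, rows, "income_k", [20, 60, 100])
print([round(p, 2) for p in curve])
```

Plotting `curve` against the grid gives the PDP for income. A rising line indicates that higher income increases the predicted approval probability on average.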

Human-Centered Design (HCD) for AI

AI should be designed with human needs first:

- Amplify decision-making – Especially in stressful environments (e.g., healthcare).

- Clarity & simplicity – Easy-to-use AI interfaces.

- Bias mitigation – Train decision-makers to recognize bias and ensure datasets are balanced.

- Human + AI learning

  - Cognitive apprenticeship: AI learns from human experts.

  - Personalization: adapt to user needs.

- User-centered design – Accessible to a wide variety of users.

Key Takeaways for the Exam

- Responsible AI = fairness, transparency, explainability, bias mitigation.

- Security = confidentiality, integrity, availability.

- Governance = policies + oversight.

- Compliance = following regulations.

- AWS Tools to Know: Bedrock Guardrails, SageMaker Clarify, Data Wrangler, Model Monitor, A2I, Model Cards, Model Dashboard, Role Manager.

- Trade-off: Higher interpretability often means lower performance on complex tasks, and vice versa.

- Decision trees = inherently interpretable models; PDPs = a way to explain black-box models.

- HCD ensures AI is human-friendly and trustworthy.